lars_path

- sklearn.linear_model.lars_path(X, y, Xy=None, *, Gram=None, max_iter=500, alpha_min=0, method='lar', copy_X=True, eps=np.float64(2.220446049250313e-16), copy_Gram=True, verbose=0, return_path=True, return_n_iter=False, positive=False)

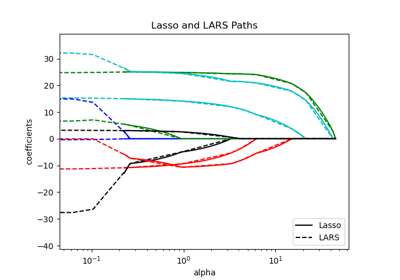

Compute Least Angle Regression or Lasso path using the LARS algorithm.

The optimization objective for the case `method='lasso'` is:

(1 / (2 * n_samples)) * ||y - Xw||^2_2 + alpha * ||w||_1

In the case of `method='lar'`, the objective function is only known in the form of an implicit equation (see discussion in [1]).
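As a rough illustration of the Lasso objective above, the sketch below evaluates it at one knot of a path computed with `method='lasso'` and checks that random perturbations of the coefficients do not lower it. The helper `lasso_objective`, the synthetic data, and the perturbation scale are assumptions made for this example, not part of the library.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import lars_path


def lasso_objective(X, y, w, alpha):
    """Hypothetical helper: (1 / (2 * n_samples)) * ||y - Xw||^2_2 + alpha * ||w||_1."""
    n_samples = X.shape[0]
    residual = y - X @ w
    return residual @ residual / (2 * n_samples) + alpha * np.abs(w).sum()


# Synthetic data chosen only for illustration.
X, y = make_regression(n_samples=100, n_features=5, noise=1.0, random_state=0)
alphas, _, coefs = lars_path(X, y, method="lasso")

# Take the second point on the path: one alpha and its coefficient vector.
k = 1
alpha_k, w_k = alphas[k], coefs[:, k]
base = lasso_objective(X, y, w_k, alpha_k)

# Perturb the coefficients at random; the objective should not decrease.
rng = np.random.default_rng(0)
perturbed = np.array([
    lasso_objective(X, y, w_k + 0.1 * rng.standard_normal(w_k.shape), alpha_k)
    for _ in range(20)
])
never_better = bool(np.all(perturbed >= base))
```

Note that the function is called on `X` and `y` as given, so the objective here has no intercept term.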

Read more in the User Guide.

- Parameters:

- X : None or ndarray of shape (n_samples, n_features)

Input data. If `X` is `None`, `Gram` must also be `None`. If only the Gram matrix is available, use `lars_path_gram` instead.

- y : None or ndarray of shape (n_samples,)

Input targets.

- Xy : array-like of shape (n_features,), default=None

`Xy = X.T @ y` that can be precomputed. It is useful only when the Gram matrix is precomputed.

- Gram : None, 'auto', bool, ndarray of shape (n_features, n_features), default=None

Precomputed Gram matrix `X.T @ X`. If `'auto'`, the Gram matrix is precomputed from the given `X` if there are more samples than features.

- max_iter : int, default=500

Maximum number of iterations to perform, set to infinity for no limit.

- alpha_min : float, default=0

Minimum correlation along the path. It corresponds to the regularization parameter `alpha` in the Lasso.

- method : {'lar', 'lasso'}, default='lar'

Specifies the returned model. Select `'lar'` for Least Angle Regression, `'lasso'` for the Lasso.

- copy_X : bool, default=True

If `False`, `X` is overwritten.

- eps : float, default=np.finfo(float).eps

The machine-precision regularization in the computation of the Cholesky diagonal factors. Increase this for very ill-conditioned systems. Unlike the `tol` parameter in some iterative optimization-based algorithms, this parameter does not control the tolerance of the optimization.

- copy_Gram : bool, default=True

If `False`, `Gram` is overwritten.

- verbose : int, default=0

Controls output verbosity.

- return_path : bool, default=True

If `True`, returns the entire path, else returns only the last point of the path.

- return_n_iter : bool, default=False

Whether to return the number of iterations.

- positive : bool, default=False

Restrict coefficients to be >= 0. This option is only allowed with `method='lasso'`. Note that the model coefficients will not converge to the ordinary-least-squares solution for small values of alpha. Only coefficients up to the smallest alpha value (`alphas[alphas > 0.].min()` when `return_path=True`) reached by the stepwise Lars-Lasso algorithm are typically in congruence with the solution of the coordinate descent `lasso_path` function.
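The `Xy` and `Gram` parameters described above can be exercised as in the following sketch, which computes the same Lasso path three ways: from `X` and `y` directly, from `X` and `y` with precomputed `Xy` and `Gram`, and from the sufficient statistics alone via `lars_path_gram`. The synthetic data and variable names are assumptions made for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import lars_path, lars_path_gram

# Synthetic data chosen only for illustration.
X, y = make_regression(n_samples=100, n_features=5, noise=1.0, random_state=0)

# Sufficient statistics that can be precomputed once and reused.
Gram = X.T @ X
Xy = X.T @ y

# 1) Path computed from the raw data.
alphas_direct, _, coefs_direct = lars_path(X, y, method="lasso")

# 2) Same path, reusing the precomputed Gram matrix and Xy.
alphas_gram, _, coefs_gram = lars_path(X, y, Xy=Xy, Gram=Gram, method="lasso")

# 3) Same path from the sufficient statistics only (X and y are not passed).
alphas_stats, _, coefs_stats = lars_path_gram(
    Xy=Xy, Gram=Gram, n_samples=X.shape[0], method="lasso"
)

same_as_gram = np.allclose(coefs_direct, coefs_gram)
same_as_stats = np.allclose(coefs_direct, coefs_stats)
```

With the default `copy_Gram=True`, the `Gram` array passed in is left unmodified, so it can be reused across calls.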

- Returns:

- alphas : ndarray of shape (n_alphas + 1,)

Maximum of covariances (in absolute value) at each iteration. `n_alphas` is either `max_iter`, `n_features`, or the number of nodes in the path with `alpha >= alpha_min`, whichever is smaller.

- active : ndarray of shape (n_alphas,)

Indices of active variables at the end of the path.

- coefs : ndarray of shape (n_features, n_alphas + 1)

Coefficients along the path.

- n_iter : int

Number of iterations run. Returned only if `return_n_iter` is set to `True`.
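To make the shapes of the return values concrete, the sketch below requests the iteration count with `return_n_iter=True` and then asks for only the last point of the path with `return_path=False`. The data and variable names are assumptions made for this example.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import lars_path

# Synthetic data chosen only for illustration.
X, y = make_regression(n_samples=100, n_features=5, noise=1.0, random_state=0)
n_features = X.shape[1]

# Full path plus the number of iterations.
alphas, active, coefs, n_iter = lars_path(X, y, return_n_iter=True)

# One column of coefficients per alpha; the path starts at all zeros.
shapes_agree = coefs.shape == (n_features, alphas.shape[0])
starts_at_zero = bool(np.all(coefs[:, 0] == 0))

# Only the last point of the path: a single coefficient vector.
_, _, coef_last = lars_path(X, y, return_path=False)
last_matches = np.allclose(coef_last, coefs[:, -1])
```

Since `alphas` has one more entry than the number of steps taken, `alphas.shape[0]` is `n_iter + 1` when the full path is returned.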

See also

lars_path_gram : Compute LARS path in the sufficient stats mode.

lasso_path : Compute Lasso path with coordinate descent.

LassoLars : Lasso model fit with Least Angle Regression a.k.a. Lars.

Lars : Least Angle Regression model a.k.a. LAR.

LassoLarsCV : Cross-validated Lasso, using the LARS algorithm.

LarsCV : Cross-validated Least Angle Regression model.

sklearn.decomposition.sparse_encode : Sparse coding.

References

[1] “Least Angle Regression”, Efron et al. http://statweb.stanford.edu/~tibs/ftp/lars.pdf

Examples

    >>> from sklearn.linear_model import lars_path
    >>> from sklearn.datasets import make_regression
    >>> X, y, true_coef = make_regression(
    ...     n_samples=100, n_features=5, n_informative=2, coef=True, random_state=0
    ... )
    >>> true_coef
    array([ 0. ,  0. ,  0. , 97.9, 45.7])
    >>> alphas, _, estimated_coef = lars_path(X, y)
    >>> alphas.shape
    (3,)
    >>> estimated_coef
    array([[ 0.  ,  0.  ,  0.  ],
           [ 0.  ,  0.  ,  0.  ],
           [ 0.  ,  0.  ,  0.  ],
           [ 0.  , 46.96, 97.99],
           [ 0.  ,  0.  , 45.70]])
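As a complementary sketch, the path from `lars_path` with `method='lasso'` can be compared against the coordinate descent `lasso_path` function at the same alpha values. The tight `tol`, the larger `max_iter`, the cutoff used to drop a zero alpha, and the synthetic data are all assumptions made for this example; agreement is expected only up to the solver tolerance.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import lars_path, lasso_path

# Synthetic data chosen only for illustration.
X, y = make_regression(n_samples=100, n_features=5, noise=1.0, random_state=0)

# Lasso path computed with the LARS algorithm.
alphas_lars, _, coefs_lars = lars_path(X, y, method="lasso")

# Keep strictly positive alphas; alpha = 0 is not a useful input for
# coordinate descent.
keep = alphas_lars > 1e-8

# Solve the same problems with coordinate descent at the LARS alphas.
alphas_cd, coefs_cd, _ = lasso_path(
    X, y, alphas=alphas_lars[keep], tol=1e-10, max_iter=10000
)

# Largest coefficient disagreement between the two solvers.
max_abs_diff = np.max(np.abs(coefs_lars[:, keep] - coefs_cd))
```

Both functions return alphas in decreasing order, so the columns of the two coefficient arrays line up without reordering.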