HDBSCAN#

- class sklearn.cluster.HDBSCAN(min_cluster_size=5, min_samples=None, cluster_selection_epsilon=0.0, max_cluster_size=None, metric='euclidean', metric_params=None, alpha=1.0, algorithm='auto', leaf_size=40, n_jobs=None, cluster_selection_method='eom', allow_single_cluster=False, store_centers=None, copy='warn')[source]#

Cluster data using hierarchical density-based clustering.

HDBSCAN - Hierarchical Density-Based Spatial Clustering of Applications with Noise. Performs

DBSCANover varying epsilon values and integrates the result to find a clustering that gives the best stability over epsilon. This allows HDBSCAN to find clusters of varying densities (unlikeDBSCAN), and be more robust to parameter selection. Read more in the User Guide.Added in version 1.3.

- Parameters:

- min_cluster_sizeint, default=5

The minimum number of samples in a group for that group to be considered a cluster; groupings smaller than this size will be left as noise.

- min_samplesint, default=None

The parameter

kused to calculate the distance between a pointx_pand its k-th nearest neighbor. WhenNone, defaults tomin_cluster_size.- cluster_selection_epsilonfloat, default=0.0

A distance threshold. Clusters below this value will be merged. See [5] for more information.

- max_cluster_sizeint, default=None

A limit to the size of clusters returned by the

"eom"cluster selection algorithm. There is no limit whenmax_cluster_size=None. Has no effect ifcluster_selection_method="leaf".- metricstr or callable, default=’euclidean’

The metric to use when calculating distance between instances in a feature array.

If metric is a string or callable, it must be one of the options allowed by

pairwise_distancesfor its metric parameter.If metric is “precomputed”, X is assumed to be a distance matrix and must be square.

- metric_paramsdict, default=None

Arguments passed to the distance metric.

- alphafloat, default=1.0

A distance scaling parameter as used in robust single linkage. See [3] for more information.

- algorithm{“auto”, “brute”, “kd_tree”, “ball_tree”}, default=”auto”

Exactly which algorithm to use for computing core distances; By default this is set to

"auto"which attempts to use aKDTreetree if possible, otherwise it uses aBallTreetree. Both"kd_tree"and"ball_tree"algorithms use theNearestNeighborsestimator.If the

Xpassed duringfitis sparse ormetricis invalid for bothKDTreeandBallTree, then it resolves to use the"brute"algorithm.- leaf_sizeint, default=40

Leaf size for trees responsible for fast nearest neighbour queries when a KDTree or a BallTree are used as core-distance algorithms. A large dataset size and small

leaf_sizemay induce excessive memory usage. If you are running out of memory consider increasing theleaf_sizeparameter. Ignored foralgorithm="brute".- n_jobsint, default=None

Number of jobs to run in parallel to calculate distances.

Nonemeans 1 unless in ajoblib.parallel_backendcontext.-1means using all processors. See Glossary for more details.- cluster_selection_method{“eom”, “leaf”}, default=”eom”

The method used to select clusters from the condensed tree. The standard approach for HDBSCAN* is to use an Excess of Mass (

"eom") algorithm to find the most persistent clusters. Alternatively you can instead select the clusters at the leaves of the tree – this provides the most fine grained and homogeneous clusters.- allow_single_clusterbool, default=False

By default HDBSCAN* will not produce a single cluster, setting this to True will override this and allow single cluster results in the case that you feel this is a valid result for your dataset.

- store_centersstr, default=None

Which, if any, cluster centers to compute and store. The options are:

Nonewhich does not compute nor store any centers."centroid"which calculates the center by taking the weighted average of their positions. Note that the algorithm uses the euclidean metric and does not guarantee that the output will be an observed data point."medoid"which calculates the center by taking the point in the fitted data which minimizes the distance to all other points in the cluster. This is slower than “centroid” since it requires computing additional pairwise distances between points of the same cluster but guarantees the output is an observed data point. The medoid is also well-defined for arbitrary metrics, and does not depend on a euclidean metric."both"which computes and stores both forms of centers.

- copybool, default=False

If

copy=Truethen any time an in-place modifications would be made that would overwrite data passed to fit, a copy will first be made, guaranteeing that the original data will be unchanged. Currently, it only applies whenmetric="precomputed", when passing a dense array or a CSR sparse matrix and whenalgorithm="brute".Changed in version 1.10: The default value for

copywill change fromFalsetoTruein version 1.10.

- Attributes:

- labels_ndarray of shape (n_samples,)

Cluster labels for each point in the dataset given to fit. Outliers are labeled as follows:

Noisy samples are given the label -1.

Samples with infinite elements (+/- np.inf) are given the label -2.

Samples with missing data are given the label -3, even if they also have infinite elements.

- probabilities_ndarray of shape (n_samples,)

The strength with which each sample is a member of its assigned cluster.

Clustered samples have probabilities proportional to the degree that they persist as part of the cluster.

Noisy samples have probability zero.

Samples with infinite elements (+/- np.inf) have probability 0.

Samples with missing data have probability

np.nan.

- n_features_in_int

Number of features seen during fit.

- feature_names_in_ndarray of shape (

n_features_in_,) Names of features seen during fit. Defined only when

Xhas feature names that are all strings.- centroids_ndarray of shape (n_clusters, n_features)

A collection containing the centroid of each cluster calculated under the standard euclidean metric. The centroids may fall “outside” their respective clusters if the clusters themselves are non-convex.

Note that

n_clustersonly counts non-outlier clusters. That is to say, the-1, -2, -3labels for the outlier clusters are excluded.- medoids_ndarray of shape (n_clusters, n_features)

A collection containing the medoid of each cluster calculated under the whichever metric was passed to the

metricparameter. The medoids are points in the original cluster which minimize the average distance to all other points in that cluster under the chosen metric. These can be thought of as the result of projecting themetric-based centroid back onto the cluster.Note that

n_clustersonly counts non-outlier clusters. That is to say, the-1, -2, -3labels for the outlier clusters are excluded.

See also

Notes

The

min_samplesparameter includes the point itself, whereas the implementation in scikit-learn-contrib/hdbscan does not. To get the same results in both versions, the value ofmin_sampleshere must be 1 greater than the value used in scikit-learn-contrib/hdbscan.References

Examples

>>> import numpy as np >>> from sklearn.cluster import HDBSCAN >>> from sklearn.datasets import load_digits >>> X, _ = load_digits(return_X_y=True) >>> hdb = HDBSCAN(copy=True, min_cluster_size=20) >>> hdb.fit(X) HDBSCAN(copy=True, min_cluster_size=20) >>> hdb.labels_.shape == (X.shape[0],) True >>> np.unique(hdb.labels_).tolist() [-1, 0, 1, 2, 3, 4, 5, 6, 7]

- dbscan_clustering(cut_distance, min_cluster_size=5)[source]#

Return clustering given by DBSCAN without border points.

Return clustering that would be equivalent to running DBSCAN* for a particular cut_distance (or epsilon) DBSCAN* can be thought of as DBSCAN without the border points. As such these results may differ slightly from

cluster.DBSCANdue to the difference in implementation over the non-core points.This can also be thought of as a flat clustering derived from constant height cut through the single linkage tree.

This represents the result of selecting a cut value for robust single linkage clustering. The

min_cluster_sizeallows the flat clustering to declare noise points (and cluster smaller thanmin_cluster_size).- Parameters:

- cut_distancefloat

The mutual reachability distance cut value to use to generate a flat clustering.

- min_cluster_sizeint, default=5

Clusters smaller than this value with be called ‘noise’ and remain unclustered in the resulting flat clustering.

- Returns:

- labelsndarray of shape (n_samples,)

An array of cluster labels, one per datapoint. Outliers are labeled as follows:

Noisy samples are given the label -1.

Samples with infinite elements (+/- np.inf) are given the label -2.

Samples with missing data are given the label -3, even if they also have infinite elements.

- fit(X, y=None)[source]#

Find clusters based on hierarchical density-based clustering.

- Parameters:

- X{array-like, sparse matrix} of shape (n_samples, n_features), or ndarray of shape (n_samples, n_samples)

A feature array, or array of distances between samples if

metric='precomputed'.- yNone

Ignored.

- Returns:

- selfobject

Returns self.

- fit_predict(X, y=None)[source]#

Cluster X and return the associated cluster labels.

- Parameters:

- X{array-like, sparse matrix} of shape (n_samples, n_features), or ndarray of shape (n_samples, n_samples)

A feature array, or array of distances between samples if

metric='precomputed'.- yNone

Ignored.

- Returns:

- yndarray of shape (n_samples,)

Cluster labels.

- get_metadata_routing()[source]#

Get metadata routing of this object.

Please check User Guide on how the routing mechanism works.

- Returns:

- routingMetadataRequest

A

MetadataRequestencapsulating routing information.

- get_params(deep=True)[source]#

Get parameters for this estimator.

- Parameters:

- deepbool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- paramsdict

Parameter names mapped to their values.

- set_params(**params)[source]#

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as

Pipeline). The latter have parameters of the form<component>__<parameter>so that it’s possible to update each component of a nested object.- Parameters:

- **paramsdict

Estimator parameters.

- Returns:

- selfestimator instance

Estimator instance.

Gallery examples#

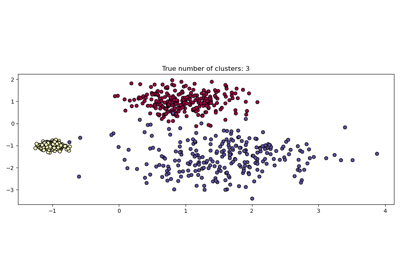

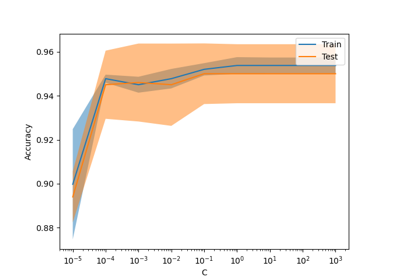

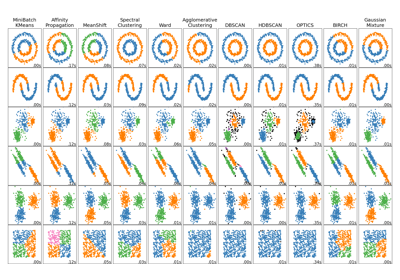

Comparing different clustering algorithms on toy datasets