l1_min_c

- sklearn.svm.l1_min_c(X, y, *, loss='squared_hinge', fit_intercept=True, intercept_scaling=1.0)

Return the lowest bound for

C.The lower bound for

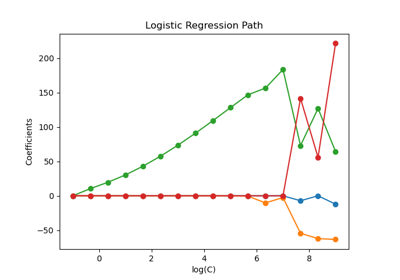

Cis computed such that forCin(l1_min_C, infinity)the model is guaranteed not to be empty. This applies to l1 penalized classifiers, such assklearn.svm.LinearSVCwith penalty=’l1’ andsklearn.linear_model.LogisticRegressionwithl1_ratio=1.This value is valid if

class_weightparameter infit()is not set.For an example of how to use this function, see Regularization path of L1- Logistic Regression.
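The guarantee above can be checked empirically. The sketch below is an illustration, not part of the l1_min_c API: it assumes an arbitrary synthetic dataset from make_classification and a hypothetical helper `n_nonzero`, fits sklearn.svm.LinearSVC with penalty='l1' (which requires dual=False) at half the bound and at ten times the bound, and counts the non-zero weights, intercept included. Below the bound the fitted model is expected to be empty; above it, at least one weight should be non-zero.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.svm import LinearSVC, l1_min_c

# Arbitrary synthetic binary problem, used only for illustration
X, y = make_classification(n_samples=100, n_features=20, random_state=42)

# Bound for the squared hinge loss, which is the loss LinearSVC uses below
min_c = l1_min_c(X, y, loss="squared_hinge", fit_intercept=True)


def n_nonzero(C):
    """Hypothetical helper: fit an L1-penalized LinearSVC, count non-zero weights.

    The intercept is counted too, because with fit_intercept=True it is
    learned as a penalized synthetic feature.
    """
    clf = LinearSVC(penalty="l1", loss="squared_hinge", dual=False, C=C)
    clf.fit(X, y)
    return int(np.count_nonzero(clf.coef_) + np.count_nonzero(clf.intercept_))


below = n_nonzero(0.5 * min_c)  # C below the bound: expected to be empty
above = n_nonzero(10 * min_c)   # C well above the bound: expected non-empty
print(below, above)
```

The factors 0.5 and 10 are arbitrary and are chosen to stay clear of the boundary value itself, where solver tolerances could make the outcome less clear-cut.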

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Training vector, where n_samples is the number of samples and n_features is the number of features.

- y : array-like of shape (n_samples,)

Target vector relative to X.

- loss : {'squared_hinge', 'log'}, default='squared_hinge'

Specifies the loss function. With 'squared_hinge' it is the squared hinge loss (a.k.a. L2 loss). With 'log' it is the loss of logistic regression models.

- fit_intercept : bool, default=True

Specifies whether the intercept should be fitted by the model. It must match the fit_intercept parameter of the estimator being fitted.

- intercept_scaling : float, default=1.0

When fit_intercept is True, the instance vector x becomes [x, intercept_scaling], i.e. a "synthetic" feature with a constant value equal to intercept_scaling is appended to the instance vector. It must match the intercept_scaling parameter of the estimator being fitted.

- Returns:

- l1_min_c : float

Minimum value for C.
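How the parameters enter the bound can be seen from a short derivation. Assuming the liblinear-style L1 objective ||w||_1 + C * sum_i loss(y_i * w·x_i), with the intercept handled as the penalized synthetic feature described under intercept_scaling, w = 0 is optimal exactly when C * |loss'(0) * sum_i y_i * x_ij| <= 1 for every feature j. The derivative of the loss at margin 0 is -2 for the squared hinge loss and -1/2 for the logistic loss, which gives 0.5 / den and 2 / den with den = max_j |sum_i y_i * x_ij|. The sketch below implements that reasoning for dense X only and compares it with l1_min_c; `l1_min_c_sketch` is a hypothetical name, and the ±1 one-vs-rest label encoding for more than two classes is an assumption about how the bound generalizes.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.preprocessing import LabelBinarizer
from sklearn.svm import l1_min_c


def l1_min_c_sketch(X, y, loss="squared_hinge", fit_intercept=True,
                    intercept_scaling=1.0):
    """Hypothetical re-derivation of the bound for dense X (illustration only)."""
    # Encode labels as +1/-1: one column per class, or a single column if binary
    Y = LabelBinarizer(neg_label=-1).fit_transform(y)
    if fit_intercept:
        # Append the constant "synthetic" feature equal to intercept_scaling
        X = np.hstack([X, np.full((X.shape[0], 1), intercept_scaling)])
    # Largest |sum_i y_i * x_ij| over classes and features (gradient scale at w = 0)
    den = np.max(np.abs(Y.T @ X))
    # |loss'(0)| is 2 for squared hinge and 1/2 for logistic loss
    return 0.5 / den if loss == "squared_hinge" else 2.0 / den


X, y = make_classification(n_samples=100, n_features=20, random_state=42)
matches = []
for loss in ("squared_hinge", "log"):
    for fit_intercept, scaling in [(False, 1.0), (True, 1.0), (True, 10.0)]:
        ours = l1_min_c_sketch(X, y, loss, fit_intercept, scaling)
        ref = l1_min_c(X, y, loss=loss, fit_intercept=fit_intercept,
                       intercept_scaling=scaling)
        matches.append(bool(np.isclose(ours, ref)))
print(matches)
```

One consequence of the derivation: for the same data and intercept settings, the 'log' bound is exactly four times the 'squared_hinge' bound.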

Examples

    >>> from sklearn.svm import l1_min_c
    >>> from sklearn.datasets import make_classification
    >>> X, y = make_classification(n_samples=100, n_features=20, random_state=42)
    >>> print(f"{l1_min_c(X, y, loss='squared_hinge', fit_intercept=True):.4f}")
    0.0044
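A common use of the bound is as the starting point of a grid of C values, as in the Regularization path of L1- Logistic Regression example. The sketch below follows that pattern but swaps in LinearSVC with penalty='l1' for the logistic model. The 7-point grid spanning three decades and the max_iter value are arbitrary choices for illustration. It records how many features receive a non-zero weight at each C.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.svm import LinearSVC, l1_min_c

X, y = make_classification(n_samples=100, n_features=20, random_state=42)

# Start the grid at the bound so the first fit is (near) empty, then span 3 decades
cs = l1_min_c(X, y, loss="squared_hinge") * np.logspace(0, 3, 7)

n_selected = []
for C in cs:
    clf = LinearSVC(penalty="l1", loss="squared_hinge", dual=False, C=C,
                    max_iter=10_000)
    clf.fit(X, y)
    # Number of features with a non-zero weight at this value of C
    n_selected.append(int(np.count_nonzero(clf.coef_)))

for C, k in zip(cs, n_selected):
    print(f"C={C:.4g}: {k} non-zero coefficients")
```

Because the grid starts at the bound, no C value is wasted on models that are guaranteed to be empty; the exact counts at each step depend on the data and on the solver tolerance.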