
Examples of Using FrozenEstimator

This example showcases some use cases of FrozenEstimator.

FrozenEstimator is a utility class that freezes a fitted estimator. This is useful, for instance, when we want to pass a fitted estimator to a meta-estimator, such as FixedThresholdClassifier, without letting the meta-estimator refit it.

```python
# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause
```

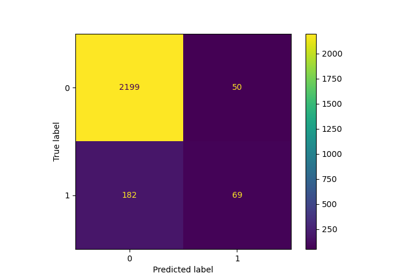

Setting a decision threshold for a pre-fitted classifier

Fitted classifiers in scikit-learn use a default, and therefore somewhat arbitrary, decision threshold to decide which class a given sample belongs to. For a binary classifier, the decision threshold is either 0.0 on the value returned by decision_function, or 0.5 on the probability returned by predict_proba.
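The sketch below checks the two default rules for a binary LogisticRegression. Its predicted probability is the sigmoid of the decision function, and the sigmoid equals 0.5 exactly when the score is 0.0, so both rules should reproduce `predict`. Exact ties at the threshold are ignored. The variable names are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=1000, random_state=0)
clf = LogisticRegression().fit(X, y)

labels = clf.predict(X)

# Default rule on the decision function: positive class when the score is above 0.0.
from_decision = clf.classes_[(clf.decision_function(X) > 0).astype(int)]

# Equivalent rule on probabilities: positive class when P(y=1) is above 0.5.
from_proba = clf.classes_[(clf.predict_proba(X)[:, 1] > 0.5).astype(int)]

print(np.array_equal(labels, from_decision), np.array_equal(labels, from_proba))
```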

However, one might want to set a custom decision threshold. We can do this by using FixedThresholdClassifier and wrapping the classifier with FrozenEstimator.

```python
from sklearn.datasets import make_classification
from sklearn.frozen import FrozenEstimator
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import FixedThresholdClassifier, train_test_split

X, y = make_classification(n_samples=1000, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
classifier = LogisticRegression().fit(X_train, y_train)
print(
    "Probability estimates for three data points:\n"
    f"{classifier.predict_proba(X_test[-3:]).round(3)}"
)
print(
    "Predicted class for the same three data points:\n"
    f"{classifier.predict(X_test[-3:])}"
)
```

```text
Probability estimates for three data points:
[[0.18 0.82]
 [0.29 0.71]
 [0.   1.  ]]
Predicted class for the same three data points:
[1 1 1]
```

Now suppose you want to set a different decision threshold on the probability estimates. We can do this by wrapping the classifier with FrozenEstimator and passing it to FixedThresholdClassifier.

```python
threshold_classifier = FixedThresholdClassifier(
    estimator=FrozenEstimator(classifier), threshold=0.9
)
```

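Note that even if fit were called on the FixedThresholdClassifier above, the underlying classifier would not be refit, because FrozenEstimator turns fit into a no-op. Since the classifier is already fitted, the wrapper can also make predictions without fit being called at all.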

Now let’s see how the predictions change with the new probability threshold.

```python
print(
    "Probability estimates for three data points with FixedThresholdClassifier:\n"
    f"{threshold_classifier.predict_proba(X_test[-3:]).round(3)}"
)
print(
    "Predicted class for the same three data points with FixedThresholdClassifier:\n"
    f"{threshold_classifier.predict(X_test[-3:])}"
)
```

```text
Probability estimates for three data points with FixedThresholdClassifier:
[[0.18 0.82]
 [0.29 0.71]
 [0.   1.  ]]
Predicted class for the same three data points with FixedThresholdClassifier:
[0 0 1]
```

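We see that the probability estimates stay the same, but the predicted classes differ because a different decision threshold is used. The first two samples have positive-class probabilities (0.82 and 0.71) below the new threshold of 0.9, so they are now assigned to class 0. The third sample (probability 1.0) stays in class 1.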

Please refer to Post-tuning the decision threshold for cost-sensitive learning to learn about cost-sensitive learning and decision threshold tuning.

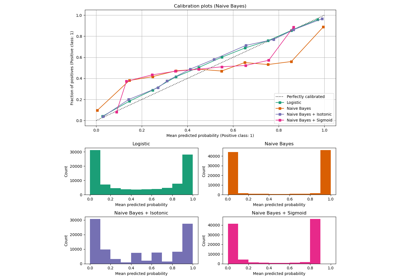

Calibration of a pre-fitted classifier

You can use FrozenEstimator to calibrate a pre-fitted classifier using CalibratedClassifierCV.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.metrics import brier_score_loss

calibrated_classifier = CalibratedClassifierCV(
    estimator=FrozenEstimator(classifier)
).fit(X_train, y_train)

prob_pos_clf = classifier.predict_proba(X_test)[:, 1]
clf_score = brier_score_loss(y_test, prob_pos_clf)
print(f"No calibration: {clf_score:.3f}")

prob_pos_calibrated = calibrated_classifier.predict_proba(X_test)[:, 1]
calibrated_score = brier_score_loss(y_test, prob_pos_calibrated)
print(f"With calibration: {calibrated_score:.3f}")
```

```text
No calibration: 0.033
With calibration: 0.032
```

