Note

Go to the end to download the full example code or to run this example in your browser via JupyterLite or Binder.

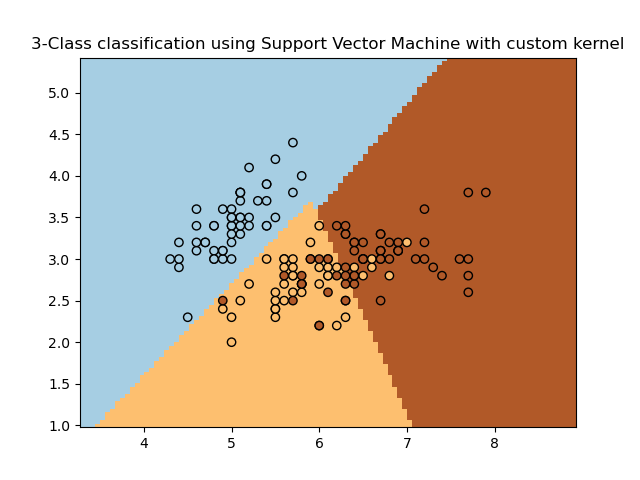

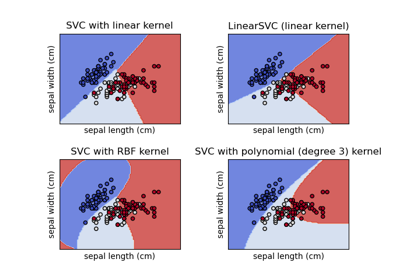

SVM with custom kernel#

Simple usage of Support Vector Machines to classify a sample. It will plot the decision surface and the support vectors.

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt

import numpy as np

from sklearn import datasets, svm

from sklearn.inspection import DecisionBoundaryDisplay

# import some data to play with

iris = datasets.load_iris()

X = iris.data[:, :2] # we only take the first two features. We could

# avoid this ugly slicing by using a two-dim dataset

Y = iris.target

def my_kernel(X, Y):

"""

We create a custom kernel:

(2 0)

k(X, Y) = X ( ) Y.T

(0 1)

"""

M = np.array([[2, 0], [0, 1.0]])

return np.dot(np.dot(X, M), Y.T)

h = 0.02 # step size in the mesh

# we create an instance of SVM and fit out data.

clf = svm.SVC(kernel=my_kernel)

clf.fit(X, Y)

ax = plt.gca()

DecisionBoundaryDisplay.from_estimator(

clf,

X,

cmap=plt.cm.Paired,

ax=ax,

response_method="predict",

plot_method="pcolormesh",

shading="auto",

)

# Plot also the training points

plt.scatter(X[:, 0], X[:, 1], c=Y, cmap=plt.cm.Paired, edgecolors="k")

plt.title("3-Class classification using Support Vector Machine with custom kernel")

plt.axis("tight")

plt.show()

Total running time of the script: (0 minutes 0.077 seconds)

Related examples

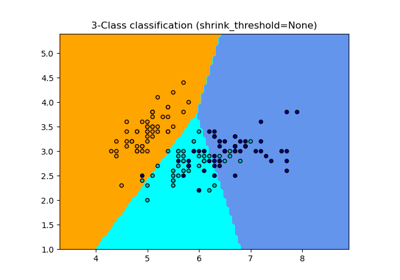

Plot different SVM classifiers in the iris dataset

Plot different SVM classifiers in the iris dataset