make_scorer

- sklearn.metrics.make_scorer(score_func, *, response_method='predict', greater_is_better=True, **kwargs)

Make a scorer from a performance metric or loss function.

A scorer is a wrapper around an arbitrary metric or loss function that is called with the signature scorer(estimator, X, y_true, **kwargs).

It is accepted by all scikit-learn estimators and functions that take a scoring parameter.

The response_method parameter specifies which method of the estimator is used to feed the scoring/loss function.

Read more in the User Guide.
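As a minimal sketch of the calling convention described above, the snippet below builds a scorer and calls it directly as scorer(estimator, X, y_true). The synthetic dataset and the choice of LogisticRegression are illustrative assumptions, not part of the original reference.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import fbeta_score, make_scorer

# Illustrative data and estimator (assumptions made for this sketch).
X, y = make_classification(n_samples=200, random_state=0)
clf = LogisticRegression(max_iter=1000).fit(X, y)

# Wrap the metric; beta=2 is forwarded to fbeta_score through **kwargs.
ftwo_scorer = make_scorer(fbeta_score, beta=2)

# The scorer takes the fitted estimator, not predictions.
score_via_scorer = ftwo_scorer(clf, X, y)

# With the default response_method='predict', this should match
# calling the metric on clf.predict(X) by hand.
score_by_hand = fbeta_score(y, clf.predict(X), beta=2)
```

The scorer calls the estimator's prediction method itself, which is why tools that take a scoring parameter can evaluate any fitted estimator without knowing which metric is wrapped.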

- Parameters:

- score_func : callable

Score function (or loss function) with signature score_func(y, y_pred, **kwargs).

- response_method : {"predict_proba", "decision_function", "predict"} or list/tuple of such str, default="predict"

Specifies the response method used to get predictions from an estimator (i.e. predict_proba, decision_function or predict). Possible choices are:

if str, it is the name of the method to call;

if a list or tuple of str, it gives the method names in order of preference. The method used is the first one in the list that is implemented by estimator.

Added in version 1.4.

- greater_is_better : bool, default=True

Whether score_func is a score function (default), meaning high is good, or a loss function, meaning low is good. In the latter case, the scorer object will sign-flip the outcome of the score_func.

- **kwargs : additional arguments

Additional parameters to be passed to score_func.

- Returns:

- scorercallable

Callable object that returns a scalar score; greater is better.
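To illustrate the "greater is better" convention of the returned scorer, here is a small sketch that wraps a loss function with greater_is_better=False. The regression data and the LinearRegression estimator are assumptions made for this example.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import make_scorer, mean_squared_error

# Illustrative regression problem (an assumption of this sketch).
X, y = make_regression(n_samples=100, n_features=5, noise=10.0, random_state=0)
reg = LinearRegression().fit(X, y)

# mean_squared_error is a loss: lower is better, so tell make_scorer.
neg_mse_scorer = make_scorer(mean_squared_error, greater_is_better=False)

# The scorer returns the sign-flipped loss, so larger values are still better.
scorer_value = neg_mse_scorer(reg, X, y)
mse = mean_squared_error(y, reg.predict(X))
```

Here scorer_value should equal -mse, which lets model selection tools maximize the scorer output whether the wrapped function is a score or a loss.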

Examples

>>> from sklearn.metrics import fbeta_score, make_scorer
>>> ftwo_scorer = make_scorer(fbeta_score, beta=2)
>>> ftwo_scorer
make_scorer(fbeta_score, response_method='predict', beta=2)
>>> from sklearn.model_selection import GridSearchCV
>>> from sklearn.svm import LinearSVC
>>> grid = GridSearchCV(LinearSVC(), param_grid={'C': [1, 10]},
...                     scoring=ftwo_scorer)

Gallery examples

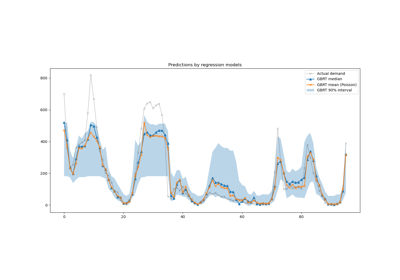

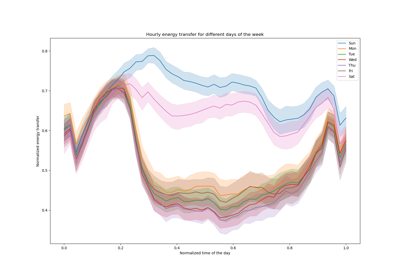

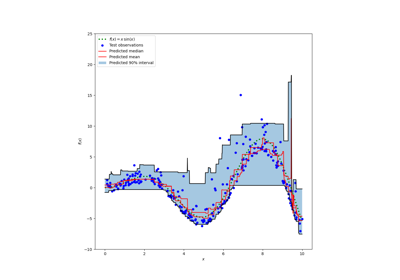

Prediction Intervals for Gradient Boosting Regression

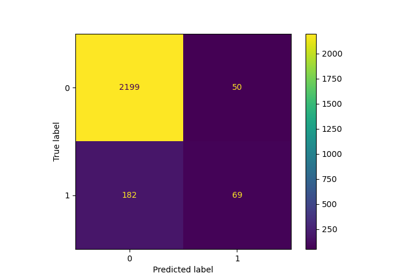

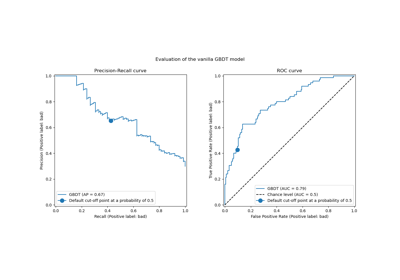

Post-tuning the decision threshold for cost-sensitive learning

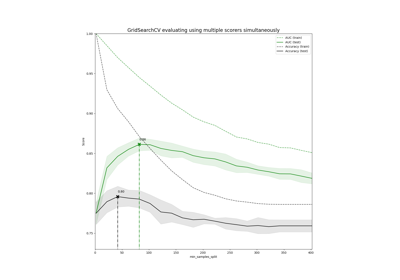

Demonstration of multi-metric evaluation on cross_val_score and GridSearchCV