sklearn.metrics.make_scorer

- sklearn.metrics.make_scorer(score_func, *, greater_is_better=True, needs_proba=False, needs_threshold=False, **kwargs)

Make a scorer from a performance metric or loss function.

This factory function wraps scoring functions for use in GridSearchCV and cross_val_score. It takes a score function, such as accuracy_score, mean_squared_error, adjusted_rand_score or average_precision_score, and returns a callable that scores an estimator’s output. The signature of the call is (estimator, X, y), where estimator is the model to be evaluated, X is the data and y is the ground truth labeling (or None in the case of unsupervised models).

Read more in the User Guide.
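As a minimal sketch of the call signature described above, the snippet below wraps accuracy_score and calls the resulting scorer as scorer(estimator, X, y). The dataset (load_iris) and the estimator (LogisticRegression) are illustrative assumptions, not part of the original description.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, make_scorer

# Illustrative data and model (assumptions); any fitted estimator
# exposing `predict` could be used instead.
X, y = load_iris(return_X_y=True)
clf = LogisticRegression(max_iter=1000).fit(X, y)

# Wrap the metric so it can be called as scorer(estimator, X, y).
accuracy_scorer = make_scorer(accuracy_score)

# The scorer calls clf.predict(X) internally and compares the result with y,
# so it should agree with calling the metric directly on the predictions.
scorer_value = accuracy_scorer(clf, X, y)
direct_value = accuracy_score(y, clf.predict(X))
```

The same scorer object can be passed as the scoring argument of GridSearchCV or cross_val_score.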

- Parameters:

- score_func : callable

Score function (or loss function) with signature score_func(y, y_pred, **kwargs).

- greater_is_better : bool, default=True

Whether score_func is a score function (default), meaning high is good, or a loss function, meaning low is good. In the latter case, the scorer object will sign-flip the outcome of the score_func.

- needs_proba : bool, default=False

Whether score_func requires predict_proba to get probability estimates out of a classifier.

If True, for binary y_true, the score function is supposed to accept a 1D y_pred (i.e., probability of the positive class, shape (n_samples,)).

- needs_threshold : bool, default=False

Whether score_func takes a continuous decision certainty. This only works for binary classification using estimators that have either a decision_function or predict_proba method.

If True, for binary y_true, the score function is supposed to accept a 1D y_pred (i.e., probability of the positive class or the decision function, shape (n_samples,)).

For example, average_precision or the area under the ROC curve cannot be computed using discrete predictions alone.

- **kwargs : additional arguments

Additional parameters to be passed to score_func.
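The sketch below illustrates a probability-based scorer built around roc_auc_score. It rests on one assumption that goes beyond this page: in scikit-learn 1.4 and later, needs_proba and needs_threshold were superseded by a response_method parameter, so the code inspects the installed signature and uses whichever form is available. The dataset and estimator are illustrative choices.

```python
import inspect

import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import make_scorer, roc_auc_score

# Illustrative binary problem and classifier (assumptions).
X, y = make_classification(n_samples=200, random_state=0)
clf = LogisticRegression(max_iter=1000).fit(X, y)

# Assumption: newer releases expose `response_method` instead of
# `needs_proba`; pick whichever the installed version supports.
if "response_method" in inspect.signature(make_scorer).parameters:
    auc_scorer = make_scorer(roc_auc_score, response_method="predict_proba")
else:
    auc_scorer = make_scorer(roc_auc_score, needs_proba=True)

# For binary y, the score function receives a 1D array holding the
# probability of the positive class, shape (n_samples,).
scorer_value = auc_scorer(clf, X, y)
direct_value = roc_auc_score(y, clf.predict_proba(X)[:, 1])
```

Checking the signature rather than the version string keeps the sketch independent of how a given release reports its version.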

- Returns:

- scorer : callable

Callable object that returns a scalar score; greater is better.
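To make the "greater is better" convention concrete, the following sketch wraps a loss function with greater_is_better=False and checks that the scorer returns the negated loss. The synthetic regression data and the LinearRegression model are assumptions chosen only for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import make_scorer, mean_squared_error

# Synthetic regression data (an assumption for this sketch).
rng = np.random.RandomState(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=50)
reg = LinearRegression().fit(X, y)

# mean_squared_error is a loss (low is good), so ask the scorer to
# sign-flip it; the returned value then follows "greater is better".
neg_mse_scorer = make_scorer(mean_squared_error, greater_is_better=False)

mse = mean_squared_error(y, reg.predict(X))
score = neg_mse_scorer(reg, X, y)  # expected to equal -mse
```

Because of the sign flip, model selection tools can always maximize the scorer's output, whether the underlying function is a score or a loss.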

Notes

If needs_proba=False and needs_threshold=False, the score function is supposed to accept the output of predict. If needs_proba=True, the score function is supposed to accept the output of predict_proba (for binary y_true, the score function is supposed to accept the probability of the positive class). If needs_threshold=True, the score function is supposed to accept the output of decision_function, or of predict_proba when decision_function is not present.

Examples

    >>> from sklearn.metrics import fbeta_score, make_scorer
    >>> ftwo_scorer = make_scorer(fbeta_score, beta=2)
    >>> ftwo_scorer
    make_scorer(fbeta_score, beta=2)
    >>> from sklearn.model_selection import GridSearchCV
    >>> from sklearn.svm import LinearSVC
    >>> grid = GridSearchCV(LinearSVC(), param_grid={'C': [1, 10]},
    ...                     scoring=ftwo_scorer)
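The example above constructs the grid search but does not fit it. A possible continuation, sketched here on a synthetic dataset from make_classification (an assumption, as is cv=3), fits the search and reads back the selected parameter and its cross-validated F2 score.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import fbeta_score, make_scorer
from sklearn.model_selection import GridSearchCV
from sklearn.svm import LinearSVC

# Synthetic binary classification data (an assumption for this sketch).
X, y = make_classification(n_samples=200, random_state=0)

# Same scorer as in the example: F-beta with beta=2 passed via **kwargs.
ftwo_scorer = make_scorer(fbeta_score, beta=2)

# cv=3 is an illustrative choice to keep the run short.
grid = GridSearchCV(LinearSVC(), param_grid={'C': [1, 10]},
                    scoring=ftwo_scorer, cv=3)
grid.fit(X, y)

best_C = grid.best_params_['C']   # one of the values in param_grid
best_score = grid.best_score_     # mean cross-validated F2 score
```

LinearSVC may emit convergence warnings on some data; they do not affect how the scorer is used.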

Examples using sklearn.metrics.make_scorer

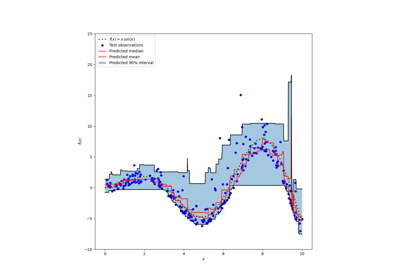

Prediction Intervals for Gradient Boosting Regression

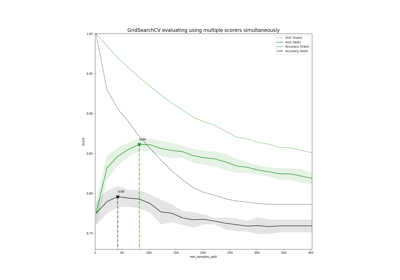

Demonstration of multi-metric evaluation on cross_val_score and GridSearchCV