precision_recall_curve

- sklearn.metrics.precision_recall_curve(y_true, y_score=None, *, pos_label=None, sample_weight=None, drop_intermediate=False, probas_pred='deprecated')

Compute precision-recall pairs for different probability thresholds.

Note: this implementation is restricted to the binary classification task.

The precision is the ratio tp / (tp + fp), where tp is the number of true positives and fp the number of false positives. The precision is intuitively the ability of the classifier not to label as positive a sample that is negative.

The recall is the ratio tp / (tp + fn), where tp is the number of true positives and fn the number of false negatives. The recall is intuitively the ability of the classifier to find all the positive samples.

The last precision and recall values are 1. and 0. respectively and do not have a corresponding threshold. This ensures that the graph starts on the y axis.

The first precision and recall values are precision=class balance and recall=1.0 which corresponds to a classifier that always predicts the positive class.
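The two ratios can be checked by hand at a single threshold. The following is a minimal sketch using NumPy only; the helper name `precision_recall_at` is hypothetical and not part of scikit-learn, and it assumes labels in {0, 1} and at least one predicted positive (it does not guard against division by zero).

```python
import numpy as np

y_true = np.array([0, 0, 1, 1])
y_score = np.array([0.1, 0.4, 0.35, 0.8])


def precision_recall_at(threshold):
    """Hypothetical helper: precision and recall when predicting
    positive for every sample with score >= threshold."""
    y_pred = y_score >= threshold
    tp = np.sum(y_pred & (y_true == 1))   # true positives
    fp = np.sum(y_pred & (y_true == 0))   # false positives
    fn = np.sum(~y_pred & (y_true == 1))  # false negatives
    return tp / (tp + fp), tp / (tp + fn)


# At the lowest score every sample is predicted positive, so precision
# equals the class balance (2 positives out of 4) and recall is 1.0.
p_low, r_low = precision_recall_at(0.1)

# At 0.35 one negative (score 0.4) is still predicted positive.
p_mid, r_mid = precision_recall_at(0.35)
print(p_low, r_low, p_mid, r_mid)
```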

Read more in the User Guide.

- Parameters:

- y_true : array-like of shape (n_samples,)

True binary labels. If labels are not either {-1, 1} or {0, 1}, then pos_label should be explicitly given.

- y_score : array-like of shape (n_samples,)

Target scores, can either be probability estimates of the positive class, or non-thresholded measure of decisions (as returned by decision_function on some classifiers). For decision_function scores, values greater than or equal to zero should indicate the positive class.

- pos_label : int, float, bool or str, default=None

The label of the positive class. When pos_label=None, if y_true is in {-1, 1} or {0, 1}, pos_label is set to 1, otherwise an error will be raised.

- sample_weight : array-like of shape (n_samples,), default=None

Sample weights.

- drop_intermediate : bool, default=False

Whether to drop some suboptimal thresholds which would not appear on a plotted precision-recall curve. This is useful in order to create lighter precision-recall curves.

Added in version 1.3.

- probas_pred : array-like of shape (n_samples,)

Target scores, can either be probability estimates of the positive class, or non-thresholded measure of decisions (as returned by decision_function on some classifiers).

Deprecated since version 1.5: probas_pred is deprecated and will be removed in 1.7. Use y_score instead.

- Returns:

- precision : ndarray of shape (n_thresholds + 1,)

Precision values such that element i is the precision of predictions with score >= thresholds[i] and the last element is 1.

- recall : ndarray of shape (n_thresholds + 1,)

Decreasing recall values such that element i is the recall of predictions with score >= thresholds[i] and the last element is 0.

- thresholds : ndarray of shape (n_thresholds,)

Increasing thresholds on the decision function used to compute precision and recall, where n_thresholds = len(np.unique(y_score)).

See also

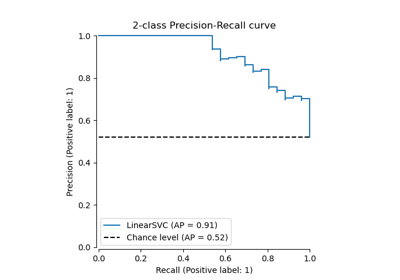

PrecisionRecallDisplay.from_estimator : Plot Precision Recall Curve given a binary classifier.

PrecisionRecallDisplay.from_predictions : Plot Precision Recall Curve using predictions from a binary classifier.

average_precision_score : Compute average precision from prediction scores.

det_curve : Compute error rates for different probability thresholds.

roc_curve : Compute Receiver operating characteristic (ROC) curve.
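To show how this curve relates to average_precision_score, the sketch below recomputes average precision from the returned arrays as a sum of precisions weighted by the change in recall, then compares it with the library value. The data is illustrative, and the variable names ap_manual and ap_sklearn are chosen for this example only.

```python
import numpy as np
from sklearn.metrics import average_precision_score, precision_recall_curve

y_true = np.array([0, 0, 1, 1])
y_score = np.array([0.1, 0.4, 0.35, 0.8])
precision, recall, _ = precision_recall_curve(y_true, y_score)

# recall is decreasing, so np.diff(recall) is <= 0; negating the sum
# gives the step-wise area: sum over n of (R_n - R_{n-1}) * P_n.
ap_manual = -np.sum(np.diff(recall) * precision[:-1])
ap_sklearn = average_precision_score(y_true, y_score)
print(ap_manual, ap_sklearn)
```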

Examples

>>> import numpy as np
>>> from sklearn.metrics import precision_recall_curve
>>> y_true = np.array([0, 0, 1, 1])
>>> y_scores = np.array([0.1, 0.4, 0.35, 0.8])
>>> precision, recall, thresholds = precision_recall_curve(
...     y_true, y_scores)
>>> precision
array([0.5       , 0.66666667, 0.5       , 1.        , 1.        ])
>>> recall
array([1. , 1. , 0.5, 0.5, 0. ])
>>> thresholds
array([0.1 , 0.35, 0.4 , 0.8 ])
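One common use of the returned arrays is picking an operating threshold. The following is a sketch, not a recommended procedure: it reuses the example data above and assumes a hypothetical requirement of at least 0.6 precision, then keeps the qualifying threshold with the highest recall. Precision is not monotonic in the threshold, so all qualifying thresholds are considered rather than just the first.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve

y_true = np.array([0, 0, 1, 1])
y_scores = np.array([0.1, 0.4, 0.35, 0.8])
precision, recall, thresholds = precision_recall_curve(y_true, y_scores)

target_precision = 0.6  # hypothetical requirement for this sketch

# Ignore the final (precision=1, recall=0) point: it has no threshold.
candidates = np.flatnonzero(precision[:-1] >= target_precision)

# Among thresholds meeting the target, keep the one with highest recall.
best = candidates[np.argmax(recall[:-1][candidates])]
chosen_threshold = thresholds[best]
print(chosen_threshold, precision[best], recall[best])
```

If no threshold meets the target, candidates is empty and the indexing raises an error, so real code would need to handle that case.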