sklearn.neural_network.BernoulliRBM

class sklearn.neural_network.BernoulliRBM(n_components=256, learning_rate=0.1, batch_size=10, n_iter=10, verbose=0, random_state=None)

Bernoulli Restricted Boltzmann Machine (RBM).

A Restricted Boltzmann Machine with binary visible units and binary hidden units. Parameters are estimated using Stochastic Maximum Likelihood (SML), also known as Persistent Contrastive Divergence (PCD) [2].
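Here is a minimal NumPy sketch of the SML/PCD update rule. It is an illustration under stated assumptions and not scikit-learn's code. The helper name pcd_step is hypothetical, and so are three choices made here for brevity: full-batch updates, one persistent chain per training row, and N(0, 0.01) weight initialization. PCD differs from plain contrastive divergence in one respect. Its negative-phase chains (h_chains) are not restarted from the data at each update; they continue from the state the previous update left them in. Each update is dominated by products with the (n_components, n_features) weight matrix, which is consistent with the O(d ** 2) complexity stated for this class.

```python
import numpy as np
from scipy.special import expit  # logistic sigmoid


def pcd_step(W, b_vis, b_hid, v_batch, h_chains, lr, rng):
    """Hypothetical PCD/SML update, applied in place; returns new chain states.

    W: (n_components, n_features) weights, b_vis: (n_features,) visible biases,
    b_hid: (n_components,) hidden biases, v_batch: (batch_size, n_features) binary
    data, h_chains: (n_chains, n_components) binary hidden states of the
    persistent Gibbs chains, carried over from one update to the next.
    """
    # Positive phase: hidden-unit probabilities given the data.
    h_pos = expit(v_batch @ W.T + b_hid)

    # Negative phase: advance the persistent chains one Gibbs step (h -> v -> h).
    p_v = expit(h_chains @ W + b_vis)
    v_neg = (rng.random(p_v.shape) < p_v).astype(float)
    h_neg = expit(v_neg @ W.T + b_hid)

    # Gradient estimate: data statistics minus model statistics, each averaged.
    pos_scale = lr / v_batch.shape[0]
    neg_scale = lr / v_neg.shape[0]
    W += pos_scale * (h_pos.T @ v_batch) - neg_scale * (h_neg.T @ v_neg)
    b_hid += pos_scale * h_pos.sum(axis=0) - neg_scale * h_neg.sum(axis=0)
    b_vis += pos_scale * v_batch.sum(axis=0) - neg_scale * v_neg.sum(axis=0)

    # Sample binary hidden states so the chains persist into the next update.
    return (rng.random(h_neg.shape) < h_neg).astype(float)


rng = np.random.default_rng(0)
X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
n_components, n_features = 2, X.shape[1]
W = rng.normal(0.0, 0.01, size=(n_components, n_features))  # assumed init
b_vis = np.zeros(n_features)
b_hid = np.zeros(n_components)
h_chains = np.zeros((X.shape[0], n_components))  # one chain per row (assumed)

for _ in range(10):  # 10 full-batch updates, for illustration only
    h_chains = pcd_step(W, b_vis, b_hid, X, h_chains, lr=0.1, rng=rng)
```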

The time complexity of this implementation is O(d ** 2), assuming d ~ n_features ~ n_components.

Read more in the User Guide.

- Parameters

- n_components : int, default=256

Number of binary hidden units.

- learning_rate : float, default=0.1

The learning rate for weight updates. It is highly recommended to tune this hyper-parameter. Reasonable values are in the 10**[0., -3.] range, i.e. from 1.0 down to 0.001.

- batch_size : int, default=10

Number of examples per minibatch.

- n_iter : int, default=10

Number of iterations/sweeps over the training dataset to perform during training.

- verbose : int, default=0

The verbosity level. The default, zero, means silent mode.

- random_state : integer or RandomState, default=None

A random number generator instance to define the state of the random permutations generator. If an integer is given, it fixes the seed. Defaults to the global numpy random number generator.
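The following sketch uses an assumed toy binary dataset to illustrate two points from the parameter descriptions. First, an integer random_state makes repeated fits reproducible. Second, learning_rate can be swept across the suggested 10**[0., -3.] range. The sweep compares mean pseudo-likelihoods from score_samples, which is one possible selection criterion chosen here for illustration. In practice the score should be computed on held-out data rather than on the training set.

```python
import numpy as np
from sklearn.neural_network import BernoulliRBM

X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]])

# An integer random_state fixes the seed, so two identical fits agree.
params = dict(n_components=2, batch_size=2, n_iter=20, random_state=0)
rbm_a = BernoulliRBM(learning_rate=0.05, **params).fit(X)
rbm_b = BernoulliRBM(learning_rate=0.05, **params).fit(X)
reproducible = np.allclose(rbm_a.components_, rbm_b.components_)

# Coarse sweep over learning_rate in the suggested 10**[0., -3.] range.
# Scoring on the training data is for illustration only; prefer held-out data.
mean_scores = {}
for lr in (1.0, 0.1, 0.01, 0.001):
    rbm = BernoulliRBM(learning_rate=lr, **params).fit(X)
    mean_scores[lr] = rbm.score_samples(X).mean()
```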

- Attributes

- intercept_hidden_ : array-like, shape (n_components,)

Biases of the hidden units.

- intercept_visible_ : array-like, shape (n_features,)

Biases of the visible units.

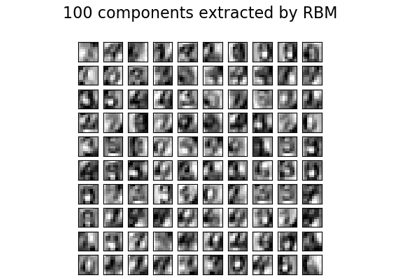

- components_ : array-like, shape (n_components, n_features)

Weight matrix, where n_features is the number of visible units and n_components is the number of hidden units.

- h_samples_ : array-like, shape (batch_size, n_components)

Hidden activations sampled from the model distribution, where batch_size is the number of examples per minibatch and n_components is the number of hidden units.
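After fitting, the attribute shapes follow the conventions listed above. Here is a quick check on assumed random binary data with 20 samples, 6 visible units, 4 hidden units and minibatches of 5:

```python
import numpy as np
from sklearn.neural_network import BernoulliRBM

rng = np.random.RandomState(0)
X = (rng.rand(20, 6) > 0.5).astype(float)  # 20 binary samples, 6 visible units

rbm = BernoulliRBM(n_components=4, batch_size=5, n_iter=5, random_state=0).fit(X)

print(rbm.components_.shape)         # (n_components, n_features)
print(rbm.intercept_hidden_.shape)   # (n_components,)
print(rbm.intercept_visible_.shape)  # (n_features,)
print(rbm.h_samples_.shape)          # (batch_size, n_components)
```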

References

- [1] Hinton, G. E., Osindero, S. and Teh, Y. A fast learning algorithm for deep belief nets. Neural Computation 18, pp 1527-1554. https://www.cs.toronto.edu/~hinton/absps/fastnc.pdf

- [2] Tieleman, T. Training Restricted Boltzmann Machines using Approximations to the Likelihood Gradient. International Conference on Machine Learning (ICML) 2008.

Examples

    >>> import numpy as np
    >>> from sklearn.neural_network import BernoulliRBM
    >>> X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]])
    >>> model = BernoulliRBM(n_components=2)
    >>> model.fit(X)
    BernoulliRBM(n_components=2)

Methods

- fit(self, X[, y]): Fit the model to the data X.

- fit_transform(self, X[, y]): Fit to data, then transform it.

- get_params(self[, deep]): Get parameters for this estimator.

- gibbs(self, v): Perform one Gibbs sampling step.

- partial_fit(self, X[, y]): Fit the model to the data X which should contain a partial segment of the data.

- score_samples(self, X): Compute the pseudo-likelihood of X.

- set_params(self, \*\*params): Set the parameters of this estimator.

- transform(self, X): Compute the hidden layer activation probabilities, P(h=1|v=X).
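The transform method computes P(h=1|v=X). For a Bernoulli RBM this conditional is the logistic sigmoid of v W^T + c, where W is components_ and c is intercept_hidden_. The sketch below assumes that transform applies exactly this formula and checks it numerically on the toy data from the example. scipy.special.expit serves as the sigmoid.

```python
import numpy as np
from scipy.special import expit  # logistic sigmoid
from sklearn.neural_network import BernoulliRBM

X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
rbm = BernoulliRBM(n_components=2, random_state=0).fit(X)

# P(h_j = 1 | v) = sigmoid(v . W_j + c_j), with W = components_, c = intercept_hidden_.
manual = expit(X @ rbm.components_.T + rbm.intercept_hidden_)
probs = rbm.transform(X)
matches = np.allclose(manual, probs)
```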

__init__(self, n_components=256, learning_rate=0.1, batch_size=10, n_iter=10, verbose=0, random_state=None)

Initialize self. See help(type(self)) for accurate signature.

fit(self, X, y=None)

Fit the model to the data X.

- Parameters

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Training data.

- Returns

- self : BernoulliRBM

The fitted model.
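fit accepts {array-like, sparse matrix} input and returns the fitted model, so it can be called on a SciPy CSR matrix and chained with other methods. Here is a minimal sketch on the toy data from the example, converted to sparse form. The n_iter and random_state values are arbitrary.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn.neural_network import BernoulliRBM

X = csr_matrix(np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float))

rbm = BernoulliRBM(n_components=2, n_iter=20, random_state=0)
fitted = rbm.fit(X)  # fit accepts sparse input and returns the estimator itself
hidden = fitted.transform(X)  # (n_samples, n_components) hidden probabilities
```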

fit_transform(self, X, y=None, **fit_params)

Fit to data, then transform it.

Fits transformer to X and y with optional parameters fit_params and returns a transformed version of X.

- Parameters

- X : numpy array of shape (n_samples, n_features)

Training set.

- y : numpy array of shape (n_samples,), default=None

Target values. BernoulliRBM is unsupervised, so y is ignored.

- **fit_params : dict

Additional fit parameters.

- Returns

- X_new : numpy array of shape (n_samples, n_components)

Transformed array.
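fit_transform is equivalent to calling fit and then transform. BernoulliRBM is unsupervised, so a y argument does not influence the result. With the same integer random_state, both routes should therefore produce the same hidden-unit probabilities. The labels below are arbitrary and only show that y is accepted.

```python
import numpy as np
from sklearn.neural_network import BernoulliRBM

X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
y = np.array([0, 1, 1, 0])  # arbitrary labels; the RBM does not use them

# With the same integer seed, fit_transform matches fit followed by transform.
H_one_step = BernoulliRBM(n_components=2, random_state=0).fit_transform(X, y)
H_two_step = BernoulliRBM(n_components=2, random_state=0).fit(X).transform(X)
same = np.allclose(H_one_step, H_two_step)
```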

get_params(self, deep=True)

Get parameters for this estimator.

- Parameters

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns

- params : mapping of string to any

Parameter names mapped to their values.
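Here is a short illustration of get_params on an unfitted estimator. BernoulliRBM has no nested estimators, so deep=True returns only its six constructor parameters. The resulting dictionary can be unpacked to build an unfitted copy with identical settings.

```python
from sklearn.neural_network import BernoulliRBM

rbm = BernoulliRBM(n_components=2, learning_rate=0.01)
params = rbm.get_params()  # deep=True; there are no nested estimators here

# Parameters not passed to the constructor keep their defaults.
print(params["n_components"], params["learning_rate"], params["n_iter"])  # 2 0.01 10

# Unpacking the mapping builds an unfitted estimator with the same settings.
rbm_copy = BernoulliRBM(**params)
```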

gibbs(self, v)

Perform one Gibbs sampling step.

- Parameters

- v : ndarray of shape (n_samples, n_features)

Values of the visible layer to start from.

- Returns

- v_new : ndarray of shape (n_samples, n_features)

Values of the visible layer after one Gibbs step.
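gibbs requires a fitted model. Calling it repeatedly runs a Gibbs chain over the visible layer, which is one way to draw approximate samples from the learned distribution. The sampled values may come back as booleans, so the sketch casts them to float before the next step. Treat that return dtype as an assumption. The chain length of 5 is arbitrary.

```python
import numpy as np
from sklearn.neural_network import BernoulliRBM

X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
rbm = BernoulliRBM(n_components=2, n_iter=50, random_state=0).fit(X)

# Run a short Gibbs chain (v -> h -> v per call), starting from the training data.
v = X.copy()
for _ in range(5):
    v = rbm.gibbs(v).astype(float)  # sampled binary visible values
```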

partial_fit(self, X, y=None)

Fit the model to the data X, which should contain a partial segment of the data.

- Parameters

- X : ndarray of shape (n_samples, n_features)

Training data.

- Returns

- self : BernoulliRBM

The fitted model.
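partial_fit supports streaming or out-of-core training. The weights are initialized on the first call and updated on each subsequent call. The sketch below assumes toy random binary data split arbitrarily into ten chunks, which it revisits for three passes.

```python
import numpy as np
from sklearn.neural_network import BernoulliRBM

rng = np.random.RandomState(0)
X = (rng.rand(100, 8) > 0.5).astype(float)  # toy binary data

rbm = BernoulliRBM(n_components=3, learning_rate=0.05, random_state=0)
for epoch in range(3):
    # Stream the data in 10 chunks of 10 rows, as if it did not fit in memory.
    for chunk in np.array_split(X, 10):
        rbm.partial_fit(chunk)
```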

score_samples(self, X)

Compute the pseudo-likelihood of X.

- Parameters

- X : {array-like, sparse matrix} of shape (n_samples, n_features)

Values of the visible layer. Must be all-boolean (not checked).

- Returns

- pseudo_likelihood : ndarray of shape (n_samples,)

Value of the pseudo-likelihood (proxy for likelihood).

Notes

This method is not deterministic: it computes a quantity called the free energy on X, then on a randomly corrupted version of X, and returns the log of the logistic function of the difference.
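The computation described in the Notes can be sketched in NumPy from the fitted attributes. The helpers free_energy and pseudo_log_likelihood are hypothetical names. The free energy of a Bernoulli RBM is F(v) = -v . b - sum_j log(1 + exp(v . W_j + c_j)), with b = intercept_visible_, W = components_ and c = intercept_hidden_. Flipping one randomly chosen feature i gives v'. Then log sigmoid(F(v') - F(v)) is the log-probability of v_i given the remaining features. The final multiplication by n_features is an assumption based on recollection of scikit-learn's source. Verify it against the installed version before relying on exact agreement with score_samples.

```python
import numpy as np
from sklearn.neural_network import BernoulliRBM


def free_energy(rbm, v):
    """F(v) = -v . b - sum_j log(1 + exp(v . W_j + c_j)) for a fitted BernoulliRBM."""
    pre_activation = v @ rbm.components_.T + rbm.intercept_hidden_
    return -(v @ rbm.intercept_visible_) - np.logaddexp(0, pre_activation).sum(axis=1)


def pseudo_log_likelihood(rbm, X, rng):
    """Hypothetical re-implementation: corrupt one random feature per sample."""
    X = np.asarray(X, dtype=float)
    n_samples, n_features = X.shape
    rows = np.arange(n_samples)
    cols = rng.randint(0, n_features, n_samples)  # one flipped feature per row
    X_corrupt = X.copy()
    X_corrupt[rows, cols] = 1 - X_corrupt[rows, cols]
    delta = free_energy(rbm, X_corrupt) - free_energy(rbm, X)
    # log(sigmoid(delta)) == -log(1 + exp(-delta)), computed stably.
    # ASSUMPTION: scaled by n_features, as recalled from scikit-learn's source.
    return n_features * -np.logaddexp(0, -delta)


X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
rbm = BernoulliRBM(n_components=2, n_iter=20, random_state=0).fit(X)
pl = pseudo_log_likelihood(rbm, X, np.random.RandomState(1))
```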

set_params(self, **params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as pipelines). The latter have parameters of the form <component>__<parameter> so that it's possible to update each component of a nested object.

- Parameters

- **params : dict

Estimator parameters.

- Returns

- self : object

Estimator instance.
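set_params works on a standalone BernoulliRBM and on a pipeline that contains one. The step names "rbm" and "logistic" and the LogisticRegression classifier below are illustrative choices, not requirements.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.neural_network import BernoulliRBM
from sklearn.pipeline import Pipeline

# Simple estimator: set_params returns the estimator, so calls can be chained.
rbm = BernoulliRBM().set_params(n_components=64, n_iter=20)

# Nested object: address a step's parameter as <component>__<parameter>.
pipe = Pipeline([("rbm", BernoulliRBM(random_state=0)),
                 ("logistic", LogisticRegression())])
pipe.set_params(rbm__learning_rate=0.01, logistic__C=10.0)
```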