
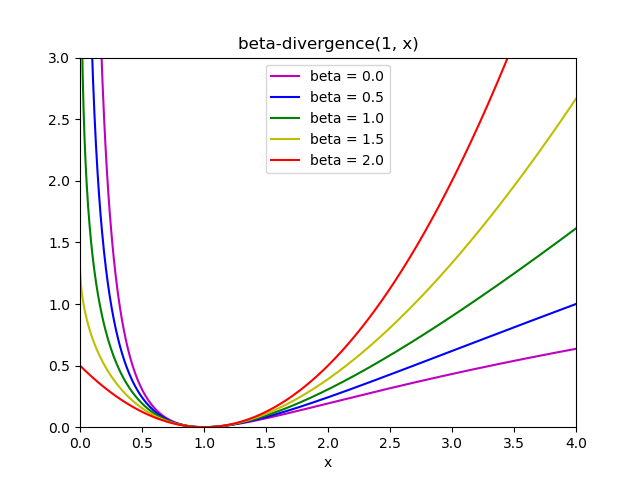

Beta-divergence loss functions

A plot comparing several beta-divergence loss functions (beta = 0, 0.5, 1, 1.5 and 2) supported by the Multiplicative-Update (`'mu'`) solver in `sklearn.decomposition.NMF`.
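For reference, the β-divergence between a positive data value $a$ and its approximation $b$ is usually defined elementwise as below. This is the standard definition from the NMF literature, restated here as a summary rather than taken from this example. A matrix loss sums this quantity over all entries.

$$
d_\beta(a \mid b) =
\begin{cases}
\dfrac{a}{b} - \log\dfrac{a}{b} - 1, & \beta = 0 \ \text{(Itakura-Saito)}\\[6pt]
a \log\dfrac{a}{b} - a + b, & \beta = 1 \ \text{(generalized Kullback-Leibler)}\\[6pt]
\dfrac{a^\beta + (\beta - 1)\, b^\beta - \beta\, a\, b^{\beta - 1}}{\beta(\beta - 1)}, & \text{otherwise}
\end{cases}
$$

Setting $a = 1$ and $b = x$ gives the plotted curves. For instance, $\beta = 2$ yields $(x - 1)^2 / 2$, half the squared error, and every curve reaches its minimum of 0 at $x = 1$.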

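```python
import numpy as np
import matplotlib.pyplot as plt

# ``_beta_divergence`` is a private helper (note the leading underscore):
# it is not part of the public API and may change between releases.
from sklearn.decomposition._nmf import _beta_divergence

# Start slightly above 0: the divergences for beta <= 1 are infinite at x = 0
x = np.linspace(0.001, 4, 1000)
y = np.zeros(x.shape)

colors = 'mbgyr'
for j, beta in enumerate((0., 0.5, 1., 1.5, 2.)):
    for i, xi in enumerate(x):
        # Divergence between the data X = 1 and the product W * H = xi * 1
        y[i] = _beta_divergence(1, xi, 1, beta)
    name = "beta = %1.1f" % beta
    plt.plot(x, y, label=name, color=colors[j])

plt.xlabel("x")
plt.title("beta-divergence(1, x)")
plt.legend(loc=0)
plt.axis([0, 4, 0, 3])
plt.show()
```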
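To check the curves without relying on the private `_beta_divergence` helper, the scalar formula can be written in plain Python. This is a minimal sketch under stated assumptions: `beta_divergence` is a hypothetical helper, not part of scikit-learn, and it handles only single, strictly positive values. It is not the library's implementation, which compares a whole matrix `X` with the product `W @ H`.

```python
import math


def beta_divergence(a: float, b: float, beta: float) -> float:
    """Scalar beta-divergence d_beta(a | b) for a, b > 0 (hypothetical helper)."""
    if a <= 0 or b <= 0:
        raise ValueError("this sketch assumes strictly positive inputs")
    if beta == 0:
        # Itakura-Saito divergence
        return a / b - math.log(a / b) - 1
    if beta == 1:
        # Generalized Kullback-Leibler divergence
        return a * math.log(a / b) - a + b
    # General case; beta == 2 reduces to (a - b) ** 2 / 2
    return (a**beta + (beta - 1) * b**beta - beta * a * b ** (beta - 1)) / (
        beta * (beta - 1)
    )


# Evaluate d_beta(1 | x) at a few points, mirroring the plotted curves
for beta in (0.0, 0.5, 1.0, 1.5, 2.0):
    values = [beta_divergence(1.0, x, beta) for x in (0.5, 1.0, 2.0)]
    print(f"beta = {beta:.1f}: " + ", ".join(f"{v:.4f}" for v in values))
```

Every printed row should be non-negative and exactly 0 in the middle column, since $d_\beta(a \mid b) \ge 0$ with equality only when $a = b$.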
