sklearn.manifold.TSNE

- class sklearn.manifold.TSNE(n_components=2, perplexity=30.0, early_exaggeration=4.0, learning_rate=1000.0, n_iter=1000, metric='euclidean', init='random', verbose=0, random_state=None)

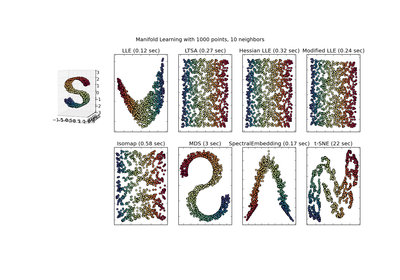

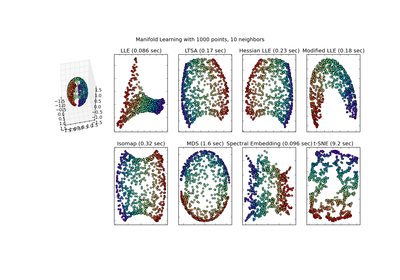

t-distributed Stochastic Neighbor Embedding.

t-SNE [1] is a tool to visualize high-dimensional data. It converts similarities between data points to joint probabilities and tries to minimize the Kullback-Leibler divergence between the joint probabilities of the low-dimensional embedding and the high-dimensional data. The t-SNE cost function is not convex, so different initializations can lead to different results.
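As a rough illustration of the cost described above, the following NumPy sketch evaluates KL(P || Q) for a given joint-probability matrix P and a candidate embedding Y, computing Q with the Student-t kernel from [1]. The helper names are hypothetical and not part of the scikit-learn API, and all pairs are computed densely (O(n²) memory), so it only suits small inputs.

```python
import numpy as np


def student_t_joint_probabilities(Y):
    """Low-dimensional joint probabilities q_ij (Student-t kernel, one degree of freedom)."""
    sq_dist = np.sum((Y[:, None, :] - Y[None, :, :]) ** 2, axis=-1)
    weights = 1.0 / (1.0 + sq_dist)
    np.fill_diagonal(weights, 0.0)  # q_ii is defined as 0
    return weights / weights.sum()


def kl_divergence(P, Y):
    """KL(P || Q) for a symmetric P with zero diagonal whose entries sum to 1."""
    Q = student_t_joint_probabilities(Y)
    mask = P > 0  # pairs with p_ij = 0 contribute nothing to the sum
    return float(np.sum(P[mask] * np.log(P[mask] / Q[mask])))


rng = np.random.default_rng(0)
Y_a = rng.normal(size=(6, 2))
Y_b = rng.normal(size=(6, 2))
P = student_t_joint_probabilities(Y_a)  # pretend the data's P matches layout Y_a exactly
print(kl_divergence(P, Y_a))      # 0.0: Q equals P, so the cost is zero
print(kl_divergence(P, Y_b) > 0)  # True: a different layout has a higher cost
```

Minimizing this quantity over Y is what the optimizer does; because the function is not convex in Y, different starting layouts can settle in different minima.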

It is highly recommended to use another dimensionality reduction method (e.g. PCA for dense data or TruncatedSVD for sparse data) to reduce the number of dimensions to a reasonable amount (e.g. 50) if the number of features is very high. This will suppress some noise and speed up the computation of pairwise distances between samples. For more tips see Laurens van der Maaten’s FAQ [2].
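A minimal sketch of the recommended two-step workflow for sparse input, assuming SciPy and scikit-learn are installed. The random sparse matrix stands in for real data, and the sizes (200 samples, 1,000 features, 50 intermediate components) are illustrative only. `init="random"` is passed explicitly so the call does not rely on the library's default.

```python
import numpy as np
import scipy.sparse as sp
from sklearn.decomposition import TruncatedSVD
from sklearn.manifold import TSNE

# Hypothetical sparse input: keep roughly 1% of the entries of a random matrix.
rng = np.random.RandomState(0)
dense = rng.random_sample((200, 1000))
dense[dense < 0.99] = 0.0
X_sparse = sp.csr_matrix(dense)

# Step 1: reduce to 50 dimensions; TruncatedSVD accepts sparse matrices directly.
X_reduced = TruncatedSVD(n_components=50, random_state=0).fit_transform(X_sparse)

# Step 2: embed the reduced (now dense) data in two dimensions.
X_embedded = TSNE(n_components=2, init="random", random_state=0).fit_transform(X_reduced)

print(X_reduced.shape, X_embedded.shape)  # (200, 50) (200, 2)
```

For dense data the same pattern applies with `sklearn.decomposition.PCA` in place of `TruncatedSVD`.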

Parameters: n_components : int, optional (default: 2)

Dimension of the embedded space.

perplexity : float, optional (default: 30)

The perplexity is related to the number of nearest neighbors that is used in other manifold learning algorithms. Larger datasets usually require a larger perplexity. Consider selecting a value between 5 and 50. The choice is not extremely critical since t-SNE is quite insensitive to this parameter.
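The link between perplexity and "number of neighbors" can be seen in a small sketch of the binary search described in [1]: for one point, it tunes the Gaussian precision β = 1/(2σ²) until the conditional distribution p_{j|i} over the other points has perplexity exp(H), with H the Shannon entropy in nats (equivalently 2^H with H in bits). Roughly, the result behaves like a uniform distribution over `perplexity` neighbors. The function name and tolerances are hypothetical; this is not scikit-learn's implementation.

```python
import numpy as np


def conditional_probabilities(sq_dists, perplexity, tol=1e-5, max_steps=100):
    """Return p_{j|i} for one point i, given squared distances to the other points.

    Binary-searches beta = 1 / (2 * sigma**2) so that exp(entropy) of the
    distribution matches the target perplexity.
    """
    target_entropy = np.log(perplexity)  # entropy measured in nats
    beta, beta_lo, beta_hi = 1.0, 0.0, np.inf
    for _ in range(max_steps):
        # Shift by the smallest distance so exp() cannot underflow for every j.
        p = np.exp(-beta * (sq_dists - sq_dists.min()))
        p /= p.sum()
        nz = p > 0
        entropy = -np.sum(p[nz] * np.log(p[nz]))
        if abs(entropy - target_entropy) < tol:
            break
        if entropy > target_entropy:
            # Distribution too flat: narrow the Gaussian (larger beta).
            beta_lo = beta
            beta = beta * 2.0 if np.isinf(beta_hi) else (beta + beta_hi) / 2.0
        else:
            # Distribution too peaked: widen the Gaussian (smaller beta).
            beta_hi = beta
            beta = (beta + beta_lo) / 2.0
    return p


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 10))
sq_dists = np.sum((X[1:] - X[0]) ** 2, axis=1)  # point 0 against the other 49 points
p = conditional_probabilities(sq_dists, perplexity=5.0)
nz = p > 0
print(np.exp(-np.sum(p[nz] * np.log(p[nz]))))  # close to 5.0
```

A larger perplexity widens each Gaussian so that more points receive non-negligible probability, which is why larger datasets tend to call for larger values.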

early_exaggeration : float, optional (default: 4.0)

Controls how tight natural clusters in the original space are in the embedded space and how much space will be between them. For larger values, the space between natural clusters will be larger in the embedded space. Again, the choice of this parameter is not very critical. If the cost function increases during initial optimization, the early exaggeration factor or the learning rate might be too high.
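For reference, the gradient of the t-SNE cost derived in [1] is shown below with an exaggeration factor α inserted. Early exaggeration multiplies every p_ij by α (the early_exaggeration value) during the first phase of the optimization and uses α = 1 afterwards. With a larger α the attractive p_ij terms outweigh the repulsive q_ij terms, so natural clusters are pulled tighter and end up further apart.

$$
\frac{\partial C}{\partial \mathbf{y}_i} = 4 \sum_{j \neq i} \left(\alpha\, p_{ij} - q_{ij}\right)\left(\mathbf{y}_i - \mathbf{y}_j\right)\left(1 + \lVert \mathbf{y}_i - \mathbf{y}_j \rVert^2\right)^{-1},
\qquad
q_{ij} = \frac{\left(1 + \lVert \mathbf{y}_i - \mathbf{y}_j \rVert^2\right)^{-1}}{\sum_{k \neq l}\left(1 + \lVert \mathbf{y}_k - \mathbf{y}_l \rVert^2\right)^{-1}}
$$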

learning_rate : float, optional (default: 1000)

The learning rate can be a critical parameter. It should be between 100 and 1000. If the cost function increases during initial optimization, the early exaggeration factor or the learning rate might be too high. If the cost function gets stuck in a bad local minimum, increasing the learning rate sometimes helps.
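To make the advice about monitoring the cost concrete, here is a deliberately simplified optimizer: plain gradient descent on the standard t-SNE gradient from [1], with early exaggeration during the first iterations, recording the cost at every step so that increases are easy to spot. It is a hypothetical sketch for illustration only. The paper adds a momentum term and an adaptive learning rate, scikit-learn's optimizer is not reproduced here, and the helper names, the 50-iteration exaggeration phase, and the 1e-4 initialization scale are assumptions.

```python
import numpy as np


def cost_and_gradient(P, Y, exaggeration=1.0):
    """KL(P || Q) and its gradient w.r.t. Y, with P scaled by `exaggeration` in the gradient."""
    diff = Y[:, None, :] - Y[None, :, :]          # y_i - y_j, shape (n, n, d)
    W = 1.0 / (1.0 + np.sum(diff ** 2, axis=-1))  # Student-t kernel
    np.fill_diagonal(W, 0.0)
    Q = np.maximum(W / W.sum(), 1e-12)            # floor avoids log(0) below
    grad = 4.0 * np.sum(((exaggeration * P - Q) * W)[:, :, None] * diff, axis=1)
    mask = P > 0
    cost = float(np.sum(P[mask] * np.log(P[mask] / Q[mask])))
    return cost, grad


def gradient_descent(P, n_components=2, learning_rate=100.0, n_iter=300,
                     early_exaggeration=4.0, exaggeration_iters=50, seed=0):
    """Plain gradient descent; returns the embedding and the per-iteration cost."""
    rng = np.random.default_rng(seed)
    Y = 1e-4 * rng.normal(size=(P.shape[0], n_components))  # small random start
    costs = []
    for it in range(n_iter):
        alpha = early_exaggeration if it < exaggeration_iters else 1.0
        cost, grad = cost_and_gradient(P, Y, alpha)
        costs.append(cost)
        Y = Y - learning_rate * grad
    return Y, costs


# Hypothetical input: a random symmetric joint-probability matrix for 30 points.
rng = np.random.default_rng(1)
A = rng.random((30, 30))
P = A + A.T
np.fill_diagonal(P, 0.0)
P /= P.sum()

Y, costs = gradient_descent(P, learning_rate=100.0)
increases = np.flatnonzero(np.diff(costs) > 0)  # iterations where the cost went up
print(Y.shape, len(costs), len(increases))
```

The recorded cost is the unexaggerated KL divergence, so in this sketch some increases can occur while exaggeration is active; repeated increases after that phase are the symptom the text attributes to an overly high learning rate or exaggeration factor.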

n_iter : int, optional (default: 1000)

Maximum number of iterations for the optimization. Should be at least 200.

metric : string or callable, optional (default: “euclidean”)

The metric to use when calculating distance between instances in a feature array. If metric is a string, it must be one of the options allowed by scipy.spatial.distance.pdist for its metric parameter, or a metric listed in pairwise.PAIRWISE_DISTANCE_FUNCTIONS. If metric is “precomputed”, X is assumed to be a distance matrix. Alternatively, if metric is a callable function, it is called on each pair of instances (rows) and the resulting value recorded. The callable should take two arrays from X as input and return a value indicating the distance between them.
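A short sketch of two ways to supply the same city-block distances, assuming SciPy is installed and using random data for illustration: by metric name, or as a square matrix with `metric="precomputed"`. `init="random"` is set explicitly because PCA initialization is not available for precomputed distances. The two embeddings are not claimed to be identical.

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform
from sklearn.manifold import TSNE

X = np.random.RandomState(0).normal(size=(100, 5))  # hypothetical feature array

# 1) A metric given by name: distances are computed from the rows of X.
emb_named = TSNE(metric="manhattan", init="random", random_state=0).fit_transform(X)

# 2) The same distances computed up front; X is now an (n_samples, n_samples) matrix.
D = squareform(pdist(X, metric="cityblock"))
emb_pre = TSNE(metric="precomputed", init="random", random_state=0).fit_transform(D)

print(emb_named.shape, emb_pre.shape)  # (100, 2) (100, 2)
```

Precomputing is useful when the same distance matrix is reused across several runs or comes from a metric that is expensive to evaluate.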

init : string, optional (default: “random”)

Initialization of embedding. Possible options are ‘random’ and ‘pca’. PCA initialization cannot be used with precomputed distances and is usually more globally stable than random initialization.
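The restriction on PCA initialization can be checked directly. This sketch, on illustrative random data, runs `init="pca"` on a feature array and then shows that combining it with `metric="precomputed"` raises a `ValueError`.

```python
import numpy as np
from scipy.spatial.distance import pdist, squareform
from sklearn.manifold import TSNE

X = np.random.RandomState(0).normal(size=(100, 10))  # illustrative feature array

# PCA initialization: the starting layout is derived from the features of X.
emb_pca = TSNE(n_components=2, init="pca", random_state=0).fit_transform(X)

# A distance matrix has no features to run PCA on; this combination is rejected.
D = squareform(pdist(X))
try:
    TSNE(n_components=2, init="pca", metric="precomputed").fit_transform(D)
    pca_rejected = False
except ValueError:
    pca_rejected = True

print(emb_pca.shape, pca_rejected)
```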

verbose : int, optional (default: 0)

Verbosity level.

random_state : int or RandomState instance or None (default)

Pseudo Random Number generator seed control. If None, use the numpy.random singleton. Note that different initializations might result in different local minima of the cost function.

Attributes: `embedding_` : array-like, shape (n_samples, n_components)

Stores the embedding vectors.

`training_data_` : array-like, shape (n_samples, n_features)

Stores the training data.
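A quick check of the `embedding_` attribute, assuming a reasonably recent scikit-learn and random illustrative data: after `fit_transform`, the attribute holds the same array that the call returned. Only `embedding_` is inspected, since the set of fitted attributes can vary between releases.

```python
import numpy as np
from sklearn.manifold import TSNE

X = np.random.RandomState(0).normal(size=(60, 4))  # illustrative data
model = TSNE(n_components=2, init="random", random_state=0)
X_new = model.fit_transform(X)

print(model.embedding_.shape)                   # (60, 2)
print(np.array_equal(X_new, model.embedding_))  # True
```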

References

- [1] van der Maaten, L.J.P.; Hinton, G.E. Visualizing High-Dimensional Data Using t-SNE. Journal of Machine Learning Research 9:2579-2605, 2008.

- [2] van der Maaten, L.J.P. t-Distributed Stochastic Neighbor Embedding. http://homepage.tudelft.nl/19j49/t-SNE.html

Examples

    >>> import numpy as np
    >>> from sklearn.manifold import TSNE
    >>> X = np.array([[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 1]])
    >>> model = TSNE(n_components=2, random_state=0)
    >>> model.fit_transform(X)
    array([[  887.28...,   238.61...],
           [ -714.79...,  3243.34...],
           [  957.30..., -2505.78...],
           [-1130.28...,  -974.78...]])

Methods

| Method | Description |
| --- | --- |
| `fit_transform(X)` | Transform X to the embedded space. |
| `get_params([deep])` | Get parameters for this estimator. |
| `set_params(**params)` | Set the parameters of this estimator. |

- `__init__(n_components=2, perplexity=30.0, early_exaggeration=4.0, learning_rate=1000.0, n_iter=1000, metric='euclidean', init='random', verbose=0, random_state=None)`

- fit_transform(X)

Transform X to the embedded space.

Parameters: X : array, shape (n_samples, n_features) or (n_samples, n_samples)

If the metric is ‘precomputed’, X must be a square distance matrix. Otherwise, it contains one sample per row.

Returns: X_new : array, shape (n_samples, n_components)

Embedding of the training data in low-dimensional space.

- get_params(deep=True)

Get parameters for this estimator.

Parameters: deep : boolean, optional

If True, will return the parameters for this estimator and contained subobjects that are estimators.

Returns: params : mapping of string to any

Parameter names mapped to their values.
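A small example of the mapping returned by `get_params`. Because `TSNE` has no sub-estimators, `deep=True` and `deep=False` return the same dictionary here.

```python
from sklearn.manifold import TSNE

model = TSNE(n_components=2, perplexity=10.0, random_state=0)
params = model.get_params()  # deep=True by default

print(params["n_components"], params["perplexity"], params["random_state"])  # 2 10.0 0
# No nested estimators, so the shallow mapping is identical.
print(model.get_params(deep=False) == params)  # True
```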

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as pipelines). The latter have parameters of the form `<component>__<parameter>` so that it’s possible to update each component of a nested object.

Returns: self
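A sketch of the nested `<component>__<parameter>` syntax with a `Pipeline` whose last step is `TSNE`. It assumes a recent scikit-learn (where `TSNE` also implements `fit`, which `Pipeline` expects of its last step when fitting); only parameter handling is shown and nothing is fitted. The step names "pca" and "tsne" are arbitrary choices for this example.

```python
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE
from sklearn.pipeline import Pipeline

pipe = Pipeline([
    ("pca", PCA(n_components=50)),
    ("tsne", TSNE(n_components=2, random_state=0)),
])

# Nested parameters are addressed as <component>__<parameter>.
returned = pipe.set_params(pca__n_components=20, tsne__perplexity=15.0)

print(returned is pipe)                       # True: set_params returns self
print(pipe.named_steps["pca"].n_components)   # 20
print(pipe.get_params()["tsne__perplexity"])  # 15.0
```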