sklearn.preprocessing.Normalizer¶

- class sklearn.preprocessing.Normalizer(norm='l2', *, copy=True)[source]¶

Normalize samples individually to unit norm.

Each sample (i.e. each row of the data matrix) with at least one non zero component is rescaled independently of other samples so that its norm (l1, l2 or inf) equals one.

This transformer is able to work both with dense numpy arrays and scipy.sparse matrix (use CSR format if you want to avoid the burden of a copy / conversion).

Scaling inputs to unit norms is a common operation for text classification or clustering for instance. For instance the dot product of two l2-normalized TF-IDF vectors is the cosine similarity of the vectors and is the base similarity metric for the Vector Space Model commonly used by the Information Retrieval community.

Read more in the User Guide.

- Parameters:

- norm{‘l1’, ‘l2’, ‘max’}, default=’l2’

The norm to use to normalize each non zero sample. If norm=’max’ is used, values will be rescaled by the maximum of the absolute values.

- copybool, default=True

Set to False to perform inplace row normalization and avoid a copy (if the input is already a numpy array or a scipy.sparse CSR matrix).

- Attributes:

See also

normalizeEquivalent function without the estimator API.

Notes

This estimator is stateless (besides constructor parameters), the fit method does nothing but is useful when used in a pipeline.

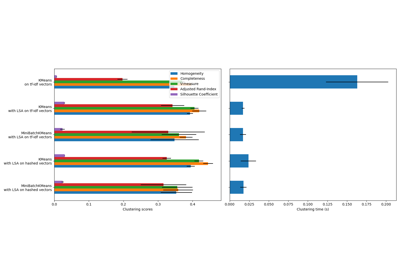

For a comparison of the different scalers, transformers, and normalizers, see examples/preprocessing/plot_all_scaling.py.

Examples

>>> from sklearn.preprocessing import Normalizer >>> X = [[4, 1, 2, 2], ... [1, 3, 9, 3], ... [5, 7, 5, 1]] >>> transformer = Normalizer().fit(X) # fit does nothing. >>> transformer Normalizer() >>> transformer.transform(X) array([[0.8, 0.2, 0.4, 0.4], [0.1, 0.3, 0.9, 0.3], [0.5, 0.7, 0.5, 0.1]])

Methods

fit(X[, y])Do nothing and return the estimator unchanged.

fit_transform(X[, y])Fit to data, then transform it.

get_feature_names_out([input_features])Get output feature names for transformation.

get_params([deep])Get parameters for this estimator.

set_params(**params)Set the parameters of this estimator.

transform(X[, copy])Scale each non zero row of X to unit norm.

- fit(X, y=None)[source]¶

Do nothing and return the estimator unchanged.

This method is just there to implement the usual API and hence work in pipelines.

- Parameters:

- X{array-like, sparse matrix} of shape (n_samples, n_features)

The data to estimate the normalization parameters.

- yIgnored

Not used, present here for API consistency by convention.

- Returns:

- selfobject

Fitted transformer.

- fit_transform(X, y=None, **fit_params)[source]¶

Fit to data, then transform it.

Fits transformer to

Xandywith optional parametersfit_paramsand returns a transformed version ofX.- Parameters:

- Xarray-like of shape (n_samples, n_features)

Input samples.

- yarray-like of shape (n_samples,) or (n_samples, n_outputs), default=None

Target values (None for unsupervised transformations).

- **fit_paramsdict

Additional fit parameters.

- Returns:

- X_newndarray array of shape (n_samples, n_features_new)

Transformed array.

- get_feature_names_out(input_features=None)[source]¶

Get output feature names for transformation.

- Parameters:

- input_featuresarray-like of str or None, default=None

Input features.

If

input_featuresisNone, thenfeature_names_in_is used as feature names in. Iffeature_names_in_is not defined, then the following input feature names are generated:["x0", "x1", ..., "x(n_features_in_ - 1)"].If

input_featuresis an array-like, theninput_featuresmust matchfeature_names_in_iffeature_names_in_is defined.

- Returns:

- feature_names_outndarray of str objects

Same as input features.

- get_params(deep=True)[source]¶

Get parameters for this estimator.

- Parameters:

- deepbool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- paramsdict

Parameter names mapped to their values.

- set_params(**params)[source]¶

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as

Pipeline). The latter have parameters of the form<component>__<parameter>so that it’s possible to update each component of a nested object.- Parameters:

- **paramsdict

Estimator parameters.

- Returns:

- selfestimator instance

Estimator instance.

- transform(X, copy=None)[source]¶

Scale each non zero row of X to unit norm.

- Parameters:

- X{array-like, sparse matrix} of shape (n_samples, n_features)

The data to normalize, row by row. scipy.sparse matrices should be in CSR format to avoid an un-necessary copy.

- copybool, default=None

Copy the input X or not.

- Returns:

- X_tr{ndarray, sparse matrix} of shape (n_samples, n_features)

Transformed array.

Examples using sklearn.preprocessing.Normalizer¶

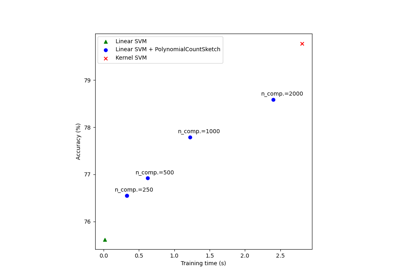

Scalable learning with polynomial kernel approximation

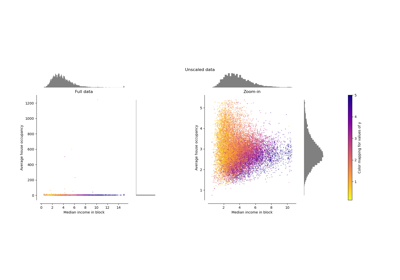

Compare the effect of different scalers on data with outliers