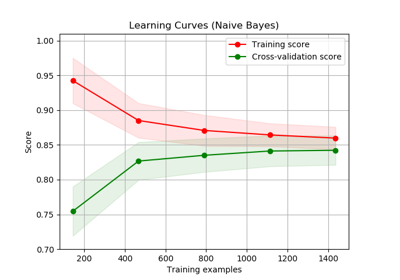

sklearn.model_selection.learning_curve


sklearn.model_selection.learning_curve(estimator, X, y, groups=None, train_sizes=array([0.1, 0.33, 0.55, 0.78, 1. ]), cv='warn', scoring=None, exploit_incremental_learning=False, n_jobs=None, pre_dispatch='all', verbose=0, shuffle=False, random_state=None, error_score='raise-deprecating')

Learning curve.

Determines cross-validated training and test scores for different training set sizes.

A cross-validation generator splits the whole dataset k times into training and test data. Subsets of the training set with varying sizes are used to train the estimator, and a score is computed for each training subset size and for the test set. Afterwards, the scores are averaged over all k runs for each training subset size.
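The procedure described above can be tried with a minimal sketch like the following. The synthetic dataset from make_classification and the GaussianNB estimator are illustrative choices, not part of this reference page, and cv=5 is passed explicitly so the example does not depend on the version-specific default.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import learning_curve
from sklearn.naive_bayes import GaussianNB

# Illustrative data: 200 samples, two classes (an assumption for this sketch).
X, y = make_classification(n_samples=200, n_features=20, random_state=0)

# Five relative training-set sizes, each evaluated on 5 cross-validation folds.
train_sizes_abs, train_scores, test_scores = learning_curve(
    GaussianNB(),
    X,
    y,
    train_sizes=np.linspace(0.1, 1.0, 5),
    cv=5,
)

print("absolute training sizes:", train_sizes_abs)
print("train_scores shape:", train_scores.shape)  # one row per size, one column per fold
print("test_scores shape:", test_scores.shape)
```

Each row of the score arrays corresponds to one training subset size and each column to one cross-validation split.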

Read more in the User Guide.

Parameters:

- estimator : object type that implements the “fit” and “predict” methods

An object of that type which is cloned for each validation.

- X : array-like, shape (n_samples, n_features)

Training vector, where n_samples is the number of samples and n_features is the number of features.

- y : array-like, shape (n_samples) or (n_samples, n_features), optional

Target relative to X for classification or regression; None for unsupervised learning.

- groups : array-like, with shape (n_samples,), optional

Group labels for the samples used while splitting the dataset into train/test set. Only used in conjunction with a “Group” cv instance (e.g., GroupKFold).

- train_sizes : array-like, shape (n_ticks,), dtype float or int

Relative or absolute numbers of training examples that will be used to generate the learning curve. If the dtype is float, it is regarded as a fraction of the maximum size of the training set (which is determined by the selected validation method), i.e. it has to be within (0, 1]. Otherwise it is interpreted as absolute sizes of the training sets. Note that for classification the number of samples usually has to be big enough to contain at least one sample from each class. (default: np.linspace(0.1, 1.0, 5))

- cv : int, cross-validation generator or an iterable, optional

Determines the cross-validation splitting strategy. Possible inputs for cv are:

- None, to use the default 3-fold cross validation,

- integer, to specify the number of folds in a (Stratified)KFold,

- CV splitter,

- An iterable yielding (train, test) splits as arrays of indices.

For integer/None inputs, if the estimator is a classifier and y is either binary or multiclass, StratifiedKFold is used. In all other cases, KFold is used.

Refer to the User Guide for the various cross-validation strategies that can be used here.

Changed in version 0.20: cv default value if None will change from 3-fold to 5-fold in v0.22.

- scoring : string, callable or None, optional, default: None

A string (see model evaluation documentation) or a scorer callable object / function with signature scorer(estimator, X, y).

- exploit_incremental_learning : boolean, optional, default: False

If the estimator supports incremental learning, this will be used to speed up fitting for different training set sizes.

- n_jobs : int or None, optional (default=None)

Number of jobs to run in parallel.

None means 1 unless in a joblib.parallel_backend context. -1 means using all processors. See Glossary for more details.

- pre_dispatch : integer or string, optional

Number of predispatched jobs for parallel execution (default is all). The option can reduce the allocated memory. The string can be an expression like ‘2*n_jobs’.

- verbose : integer, optional

Controls the verbosity: the higher, the more messages.

- shuffle : boolean, optional

Whether to shuffle training data before taking prefixes of it based on train_sizes.

- random_state : int, RandomState instance or None, optional (default=None)

If int, random_state is the seed used by the random number generator; if RandomState instance, random_state is the random number generator; if None, the random number generator is the RandomState instance used by np.random. Used when shuffle is True.

- error_score : ‘raise’ | ‘raise-deprecating’ or numeric

Value to assign to the score if an error occurs in estimator fitting. If set to ‘raise’, the error is raised. If set to ‘raise-deprecating’, a FutureWarning is printed before the error is raised. If a numeric value is given, FitFailedWarning is raised. This parameter does not affect the refit step, which will always raise the error. Default is ‘raise-deprecating’ but from version 0.22 it will change to np.nan.
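Several of the parameters above can be combined as in the following sketch. It assumes a synthetic dataset, a DecisionTreeClassifier, and a ShuffleSplit splitter, all chosen only for illustration. Here train_sizes is given as absolute integer counts, cv is an explicit CV splitter, and shuffle together with random_state makes the training prefixes reproducible.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import ShuffleSplit, learning_curve
from sklearn.tree import DecisionTreeClassifier

# Illustrative data (an assumption for this sketch): 300 samples, 10 features.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# An explicit CV splitter: 4 random splits, each with 225 training and 75 test samples.
cv = ShuffleSplit(n_splits=4, test_size=0.25, random_state=0)

sizes, train_scores, test_scores = learning_curve(
    DecisionTreeClassifier(max_depth=3, random_state=0),
    X,
    y,
    train_sizes=[25, 50, 100, 200],  # integers are absolute sizes; must not exceed 225 here
    cv=cv,
    scoring="accuracy",              # a predefined scorer name
    shuffle=True,                    # shuffle training indices before taking prefixes
    random_state=0,                  # only used because shuffle=True
)

print("sizes:", sizes)
print("train_scores shape:", train_scores.shape)
```

Because the sizes are absolute, they are checked against the number of training samples produced by the splitter rather than against the full dataset size.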

Returns:

- train_sizes_abs : array, shape (n_unique_ticks,), dtype int

Numbers of training examples that have been used to generate the learning curve. Note that the number of ticks might be less than n_ticks because duplicate entries will be removed.

- train_scores : array, shape (n_ticks, n_cv_folds)

Scores on training sets.

- test_scores : array, shape (n_ticks, n_cv_folds)

Scores on test set.
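Since the returned score arrays keep one column per cross-validation fold, a per-size summary can be obtained by reducing over axis 1. The sketch below assumes an illustrative synthetic dataset and a GaussianNB estimator; the variable names are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import learning_curve
from sklearn.naive_bayes import GaussianNB

# Illustrative data (an assumption for this sketch).
X, y = make_classification(n_samples=200, n_features=20, random_state=0)

train_sizes_abs, train_scores, test_scores = learning_curve(
    GaussianNB(), X, y, train_sizes=[0.2, 0.5, 1.0], cv=5
)

# Average and spread over the folds (axis 1) for every training-set size.
train_mean = train_scores.mean(axis=1)
test_mean = test_scores.mean(axis=1)
test_std = test_scores.std(axis=1)

for n, tr, te, sd in zip(train_sizes_abs, train_mean, test_mean, test_std):
    print(f"n_train={n}: train={tr:.3f}  test={te:.3f} (std {sd:.3f})")
```

The means and standard deviations computed this way are what is typically plotted against train_sizes_abs to draw the learning curve.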

Notes