SparseRandomProjection

- class sklearn.random_projection.SparseRandomProjection(n_components='auto', *, density='auto', eps=0.1, dense_output=False, compute_inverse_components=False, random_state=None)

Reduce dimensionality through sparse random projection.

A sparse random matrix is an alternative to a dense random projection matrix. It guarantees similar embedding quality while being much more memory efficient and allowing faster computation of the projected data.

If we note s = 1 / density, the components of the random matrix are drawn from:

- -sqrt(s) / sqrt(n_components) with probability 1 / (2s)
- 0 with probability 1 - 1 / s
- +sqrt(s) / sqrt(n_components) with probability 1 / (2s)
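The distribution above can be sampled directly with NumPy to check its two key properties: the fraction of non-zero entries is close to density, and each entry has variance close to 1 / n_components. This is only a sketch for illustration. The helper name `sample_sparse_projection` is hypothetical, it builds a dense array, and it is not how scikit-learn constructs the matrix internally.

```python
import numpy as np


def sample_sparse_projection(n_components, n_features, density, seed=0):
    """Hypothetical helper: draw a dense array from the three-valued distribution."""
    rng = np.random.default_rng(seed)
    s = 1.0 / density
    scale = np.sqrt(s) / np.sqrt(n_components)
    # -scale with prob. 1/(2s), 0 with prob. 1 - 1/s, +scale with prob. 1/(2s)
    values = np.array([-scale, 0.0, scale])
    probs = np.array([1 / (2 * s), 1 - 1 / s, 1 / (2 * s)])
    return rng.choice(values, size=(n_components, n_features), p=probs)


R = sample_sparse_projection(n_components=200, n_features=1000, density=0.1)

nonzero_fraction = np.mean(R != 0)  # should be close to density
entry_variance = R.var()            # should be close to 1 / n_components
print(nonzero_fraction, entry_variance)
```

The 1 / n_components variance is what makes the projection preserve squared norms in expectation.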

Read more in the User Guide.

Added in version 0.13.

- Parameters:

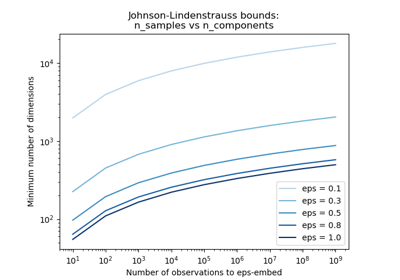

- n_components : int or 'auto', default='auto'

Dimensionality of the target projection space.

n_components can be automatically adjusted according to the number of samples in the dataset and the bound given by the Johnson-Lindenstrauss lemma. In that case the quality of the embedding is controlled by the eps parameter.

Note that the Johnson-Lindenstrauss lemma can yield very conservative estimates of the required number of components, as it makes no assumption about the structure of the dataset.

- density : float or 'auto', default='auto'

Ratio in the range (0, 1] of non-zero components in the random projection matrix.

If density = ‘auto’, the value is set to the minimum density as recommended by Ping Li et al.: 1 / sqrt(n_features).

Use density = 1 / 3.0 if you want to reproduce the results from Achlioptas, 2001.

- eps : float, default=0.1

Parameter to control the quality of the embedding according to the Johnson-Lindenstrauss lemma when n_components is set to ‘auto’. This value should be strictly positive.

Smaller values lead to a better embedding and a higher number of dimensions (n_components) in the target projection space.

- dense_output : bool, default=False

If True, ensure that the output of the random projection is a dense numpy array even if the input and random projection matrix are both sparse. In practice, if the number of components is small the number of zero components in the projected data will be very small and it will be more CPU and memory efficient to use a dense representation.

If False, the projected data uses a sparse representation if the input is sparse.

- compute_inverse_components : bool, default=False

Learn the inverse transform by computing the pseudo-inverse of the components during fit. Note that the pseudo-inverse is always a dense array, even if the training data was sparse. This means that it might be necessary to call inverse_transform on a small batch of samples at a time to avoid exhausting the available memory on the host. Moreover, computing the pseudo-inverse does not scale well to large matrices.

- random_state : int, RandomState instance or None, default=None

Controls the pseudo random number generator used to generate the projection matrix at fit time. Pass an int for reproducible output across multiple function calls. See Glossary.
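As a sketch of how eps and density interact with the 'auto' settings, the snippet below compares the fitted n_components_ with the value returned by `johnson_lindenstrauss_min_dim` from the same module, and prints the resolved density_. The data shape (25 samples, 3000 features) is taken from the Examples section. The eps values are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.random_projection import (
    SparseRandomProjection,
    johnson_lindenstrauss_min_dim,
)

n_samples, n_features = 25, 3000
X = np.zeros((n_samples, n_features))  # placeholder data with the right shape

results = []
for eps in (0.1, 0.2, 0.5):
    # Minimum dimension suggested by the Johnson-Lindenstrauss bound.
    k = johnson_lindenstrauss_min_dim(n_samples, eps=eps)
    proj = SparseRandomProjection(eps=eps, random_state=0).fit(X)
    results.append((eps, int(k), proj.n_components_, proj.density_))

for eps, k, n_components_, density_ in results:
    print(f"eps={eps}: JL bound={k}, n_components_={n_components_}, "
          f"density_={density_:.4f}")
```

Larger eps values tolerate more distortion and therefore need fewer components. With density='auto', density_ depends only on n_features.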

- Attributes:

- n_components_ : int

Concrete number of components computed when n_components="auto".

- components_ : sparse matrix of shape (n_components, n_features)

Random matrix used for the projection. Sparse matrix will be of CSR format.

- inverse_components_ : ndarray of shape (n_features, n_components)

Pseudo-inverse of the components, only computed if compute_inverse_components is True.

Added in version 1.1.

- density_ : float in range 0.0 - 1.0

Concrete density computed when density = "auto".

- n_features_in_ : int

Number of features seen during fit.

Added in version 0.24.

- feature_names_in_ : ndarray of shape (n_features_in_,)

Names of features seen during fit. Defined only when X has feature names that are all strings.

Added in version 1.0.

See also

GaussianRandomProjection: Reduce dimensionality through Gaussian random projection.

References

[1] Ping Li, T. Hastie and K. W. Church, 2006, “Very Sparse Random Projections”, https://web.stanford.edu/~hastie/Papers/Ping/KDD06_rp.pdf

[2] D. Achlioptas, 2001, “Database-friendly random projections”, https://cgi.di.uoa.gr/~optas/papers/jl.pdf

Examples

    >>> import numpy as np
    >>> from sklearn.random_projection import SparseRandomProjection
    >>> rng = np.random.RandomState(42)
    >>> X = rng.rand(25, 3000)
    >>> transformer = SparseRandomProjection(random_state=rng)
    >>> X_new = transformer.fit_transform(X)
    >>> X_new.shape
    (25, 2759)
    >>> # very few components are non-zero
    >>> np.mean(transformer.components_ != 0)
    np.float64(0.0182)

- fit(X, y=None)

Generate a sparse random projection matrix.

- Parameters:

- X : {ndarray, sparse matrix} of shape (n_samples, n_features)

Training set: only the shape is used to find optimal random matrix dimensions based on the theory referenced in the aforementioned papers.

- y : Ignored

Not used, present here for API consistency by convention.

- Returns:

- self : object

BaseRandomProjection class instance.
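Because fit uses only the shape of X, two estimators with the same integer random_state should produce the same projection matrix regardless of the values in X. The sketch below checks this with an array of random values and an array of zeros of the same shape and dtype. The shapes and seed are arbitrary.

```python
import numpy as np
from sklearn.random_projection import SparseRandomProjection

rng = np.random.RandomState(0)
X_random = rng.rand(10, 500)
X_zeros = np.zeros((10, 500))

a = SparseRandomProjection(n_components=20, random_state=42).fit(X_random)
b = SparseRandomProjection(n_components=20, random_state=42).fit(X_zeros)

# Compare the two projection matrices entry by entry.
same_matrix = np.array_equal(a.components_.toarray(), b.components_.toarray())
print(same_matrix)
```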

- fit_transform(X, y=None, **fit_params)

Fit to data, then transform it.

Fits transformer to X and y with optional parameters fit_params and returns a transformed version of X.

- Parameters:

- X : array-like of shape (n_samples, n_features)

Input samples.

- y : array-like of shape (n_samples,) or (n_samples, n_outputs), default=None

Target values (None for unsupervised transformations).

- **fit_params : dict

Additional fit parameters. Pass only if the estimator accepts additional params in its fit method.

- Returns:

- X_new : ndarray of shape (n_samples, n_features_new)

Transformed array.

- get_feature_names_out(input_features=None)

Get output feature names for transformation.

The output feature names will be prefixed by the lowercased class name. For example, if the transformer outputs 3 features, then the output feature names are: ["class_name0", "class_name1", "class_name2"].

- Parameters:

- input_features : array-like of str or None, default=None

Only used to validate feature names with the names seen in fit.

- Returns:

- feature_names_out : ndarray of str objects

Transformed feature names.

- get_metadata_routing()

Get metadata routing of this object.

Please check the User Guide on how the routing mechanism works.

- Returns:

- routing : MetadataRequest

A MetadataRequest encapsulating routing information.

- get_params(deep=True)

Get parameters for this estimator.

- Parameters:

- deep : bool, default=True

If True, will return the parameters for this estimator and contained subobjects that are estimators.

- Returns:

- params : dict

Parameter names mapped to their values.
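A short sketch of what the returned dictionary looks like for this estimator, and how it can be used to build an unfitted copy with the same settings. The eps value is arbitrary.

```python
from sklearn.random_projection import SparseRandomProjection

proj = SparseRandomProjection(eps=0.2)
params = proj.get_params()
print(params)

# The dictionary keys match the constructor arguments, so it can be
# unpacked to create a new, unfitted estimator with identical settings.
copy = SparseRandomProjection(**params)
```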

- inverse_transform(X)

Project data back to its original space.

Returns an array X_original whose transform would be X. Note that even if X is sparse, X_original is dense: this may use a lot of RAM.

If compute_inverse_components is False, the inverse of the components is computed during each call to inverse_transform, which can be costly.

- Parameters:

- X : {array-like, sparse matrix} of shape (n_samples, n_components)

Data to be transformed back.

- Returns:

- X_original : ndarray of shape (n_samples, n_features)

Reconstructed data.

- set_output(*, transform=None)

Set output container.

See Introducing the set_output API for an example on how to use the API.

- Parameters:

- transform : {"default", "pandas", "polars"}, default=None

Configure output of transform and fit_transform.

- "default": Default output format of a transformer
- "pandas": DataFrame output
- "polars": Polars output
- None: Transform configuration is unchanged

Added in version 1.4: "polars" option was added.

- Returns:

- self : estimator instance

Estimator instance.

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

- **params : dict

Estimator parameters.

- Returns:

- self : estimator instance

Estimator instance.
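A brief sketch of both uses described above: setting parameters directly on the estimator, and reaching into a Pipeline step with the <component>__<parameter> form. The step names "proj" and "clf" and the choice of LogisticRegression as the final step are assumptions made for illustration.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.random_projection import SparseRandomProjection

# Direct use: set_params returns the estimator itself, so calls can be chained.
proj = SparseRandomProjection().set_params(eps=0.3, dense_output=True)

# Nested use: "proj__..." addresses the step named "proj" in the pipeline.
pipe = Pipeline([
    ("proj", SparseRandomProjection(random_state=0)),
    ("clf", LogisticRegression()),
])
pipe.set_params(proj__n_components=50, proj__density=1 / 3)

print(pipe.named_steps["proj"])
```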

- transform(X)

Project the data by using matrix product with the random matrix.

- Parameters:

- X : {ndarray, sparse matrix} of shape (n_samples, n_features)

The input data to project into a smaller dimensional space.

- Returns:

- X_new : {ndarray, sparse matrix} of shape (n_samples, n_components)

Projected array. It is a sparse matrix only when the input is sparse and dense_output = False.
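The rule for the output type can be checked with a sparse input and the two dense_output settings, plus a dense input for comparison. This is a sketch with an arbitrary random sparse matrix built by scipy.sparse.random. The three estimators share the same random_state so that they use the same projection matrix.

```python
import numpy as np
import scipy.sparse as sp
from sklearn.random_projection import SparseRandomProjection

X_sparse = sp.random(50, 1000, density=0.01, format="csr", random_state=0)

# Sparse input, dense_output=False (default): sparse result.
sparse_out = SparseRandomProjection(
    n_components=20, random_state=0
).fit_transform(X_sparse)

# Sparse input, dense_output=True: dense ndarray result.
dense_out = SparseRandomProjection(
    n_components=20, dense_output=True, random_state=0
).fit_transform(X_sparse)

# Dense input: dense result regardless of dense_output.
from_dense = SparseRandomProjection(
    n_components=20, random_state=0
).fit_transform(X_sparse.toarray())

print(sp.issparse(sparse_out), type(dense_out), type(from_dense))
```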

Gallery examples

Manifold learning on handwritten digits: Locally Linear Embedding, Isomap…

The Johnson-Lindenstrauss bound for embedding with random projections